In an age of rapid technological development, the darker side of AI has raised serious concerns for governance. As the 2024 general election draws near, the widespread use of lifelike fake photos, audio clips, and videos is prompting concerns about the integrity of the political process.

Before the New Hampshire primary, a disturbing incident unfolded: a robocall impersonating President Joe Biden urged voters not to cast their ballots. The deceptive message played on voters' fears, claiming that their votes would matter more in the November general election. It remains unclear who was behind the calls or how widely they spread; such calls can easily be distributed using purchased lists of phone numbers.

This incident and the surge in deepfake technology have set off alarm bells among election officials nationwide. Nevada Senator Catherine Cortez Masto, acknowledging the threat posed by AI and other technologies, stresses the urgency of combating the spread of disinformation, particularly around elections. In a statement to KOLO 8 News Now, she underscored the need for responsible growth in the emerging AI and technology industries.

Regulatory void and Big Tech influence

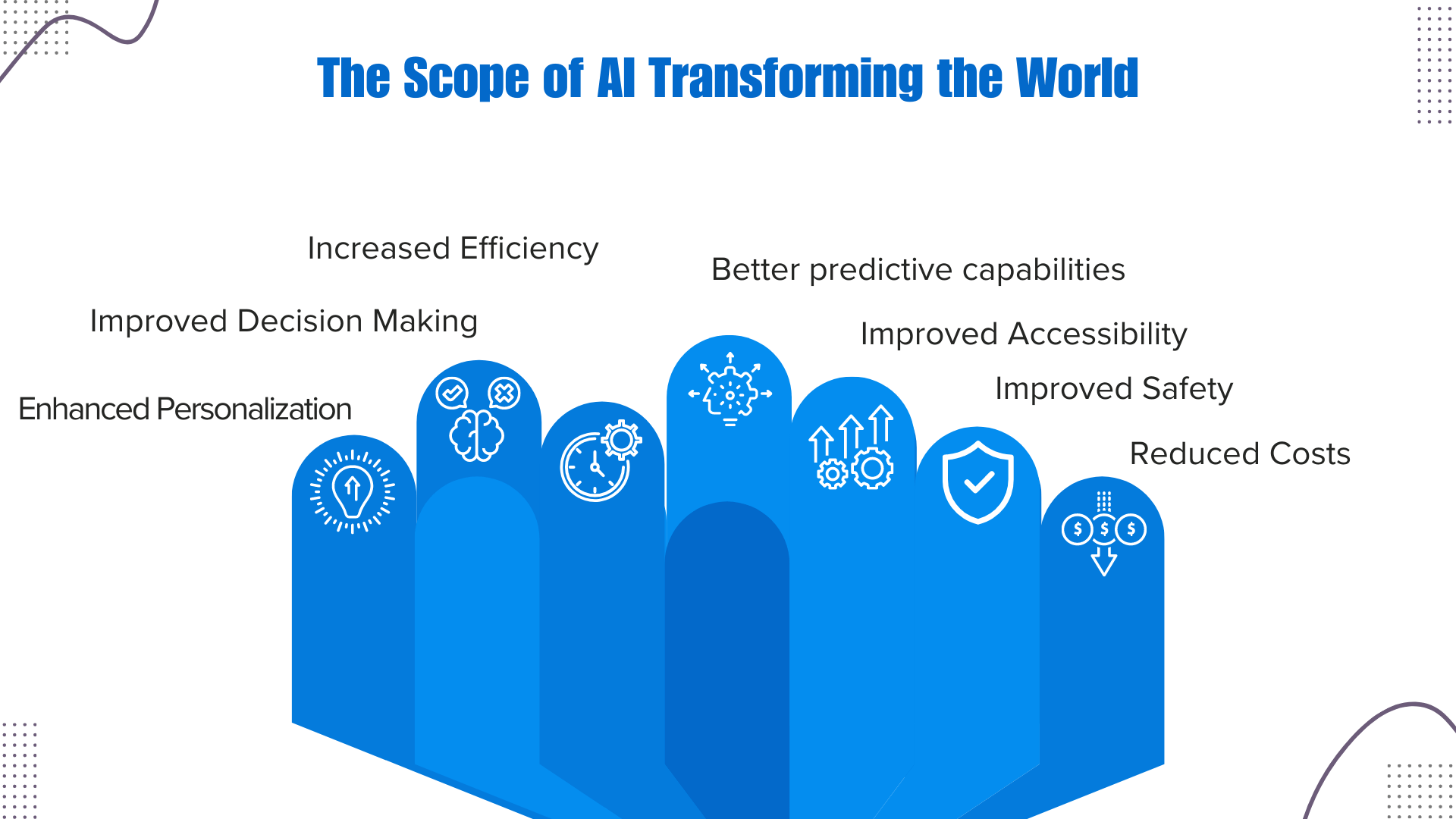

Despite growing concerns, there are currently no federal regulations addressing the misuse of AI in political contexts. Proposals are in the works, but some engineers, such as Gal Ratner, argue against regulating the internet at all. Ratner contends that the problem lies not in the technology but in who wields its power. Critics also worry that any regulation could be shaped by influential Big Tech lobbyists to benefit their own companies. Meta's top AI executive recently accused Google of extensive corporate lobbying to influence regulations worldwide.

In the absence of immediate regulation, Ratner cautions the public that their phones can be turned into weapons. He argues that the root of the problem is not AI but a lack of education. According to Ratner, the transparency brought by the internet has magnified longstanding problems of espionage and disinformation, making it essential for individuals to judge whether digital content is authentic.

With the AI genie already out of the bottle, regulatory measures may not materialize before the 2024 elections. Ratner suggests an old-school approach to the challenges posed by AI-generated misinformation. This includes running reverse image searches to trace where images came from, checking that content sources are legitimate, and verifying stories by contacting the people involved.

In Ratner's view, AI itself is not the primary concern; what matters most is education that equips people to navigate this era of digital deception. He urges heightened vigilance, reminding everyone that in today's world, anything seen or heard may have been manipulated.

Navigating the AI minefield

As the specter of AI-generated misinformation looms, the 2024 elections face unprecedented challenges. The New Hampshire robocall is a stark reminder of how technology can be misused to manipulate public opinion. With no federal regulation on the immediate horizon, the responsibility falls on individuals to stay vigilant and discerning as they navigate this AI minefield.

As authenticity becomes ever harder to establish, the call for an old-school mindset is gaining ground. The public is urged to take proactive measures, from verifying content sources to approaching digital content with healthy skepticism. As technology advances, the fight against AI-generated disinformation becomes a collective effort to safeguard the foundations of democracy.