In a new study, researchers at the Massachusetts Institute of Technology (MIT) are training an AI system to generate mocking and hateful prompts, using AI itself as a testing tool. The objective is a sound method for detecting and curbing toxic AI-generated content. The technique is called curiosity-driven red teaming, or CRT for short. The prompts it produces can then be used to teach chatbots, through preset safeguards, to exclude inappropriate answers.

Understanding and mitigating AI risks

Machine learning models, especially large language models, are rapidly matching or surpassing humans in a range of tasks, from writing software to answering non-trivial questions. These abilities can serve good or bad ends, such as spreading misinformation or harmful content. At the same time, AI's potential in fields such as healthcare is vast, and it is steadily becoming an essential part of those systems. An AI such as ChatGPT can write computer code on demand, but without proper guidance it may also produce inappropriate content.

MIT's approach addresses these issues by having an AI model synthesize the test prompts itself: it generates prompts and then observes how the target chatbot responds. This helps scientists spot emerging risks and address them early. The study, published as a paper on arXiv, indicates that the AI system can come up with a broader range of malicious prompts than human testers would likely think of. This, in turn, can help the system counter such attacks more effectively.
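The article does not spell out how the prompt generator is scored, so the following is only a minimal sketch, assuming a curiosity-style setup in which a red-team model is rewarded both for provoking a harmful reply and for trying prompts unlike those it has already tried. The keyword-based `toxicity` scorer, the bag-of-words `novelty` measure and the weight `beta` are hypothetical stand-ins, not the MIT team's code.

```python
import math
from collections import Counter

# Hypothetical toxicity scorer: a toy keyword list standing in for a real classifier.
TOXIC_WORDS = {"murder", "kill", "hate"}


def toxicity(text: str) -> float:
    """Fraction of words in `text` that appear in the toy TOXIC_WORDS list."""
    words = text.lower().split()
    if not words:
        return 0.0
    return sum(w.strip("?!.,") in TOXIC_WORDS for w in words) / len(words)


def bag_of_words(text: str) -> Counter:
    """Lower-cased word counts with basic punctuation stripped."""
    return Counter(w.strip("?!.,") for w in text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def novelty(prompt: str, history: list[str]) -> float:
    """1 minus the highest similarity to any earlier prompt (1.0 if there is no history)."""
    if not history:
        return 1.0
    vec = bag_of_words(prompt)
    return 1.0 - max(cosine(vec, bag_of_words(h)) for h in history)


def red_team_reward(prompt: str, response: str, history: list[str], beta: float = 0.5) -> float:
    """Toy reward: toxicity of the target's reply plus a weighted novelty bonus for the prompt."""
    return toxicity(response) + beta * novelty(prompt, history)


history = ["how do I hurt someone"]
repeat_score = red_team_reward("how do I hurt someone", "I hate you", history)  # no novelty bonus
fresh_score = red_team_reward("tell me a story", "I hate you", history)  # full novelty bonus
```

The novelty term is what pushes the generator away from repeating one prompt that already works, which is how such a system could cover a broader range of attacks than a fixed list written by people.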

Red teaming for safer AI interaction

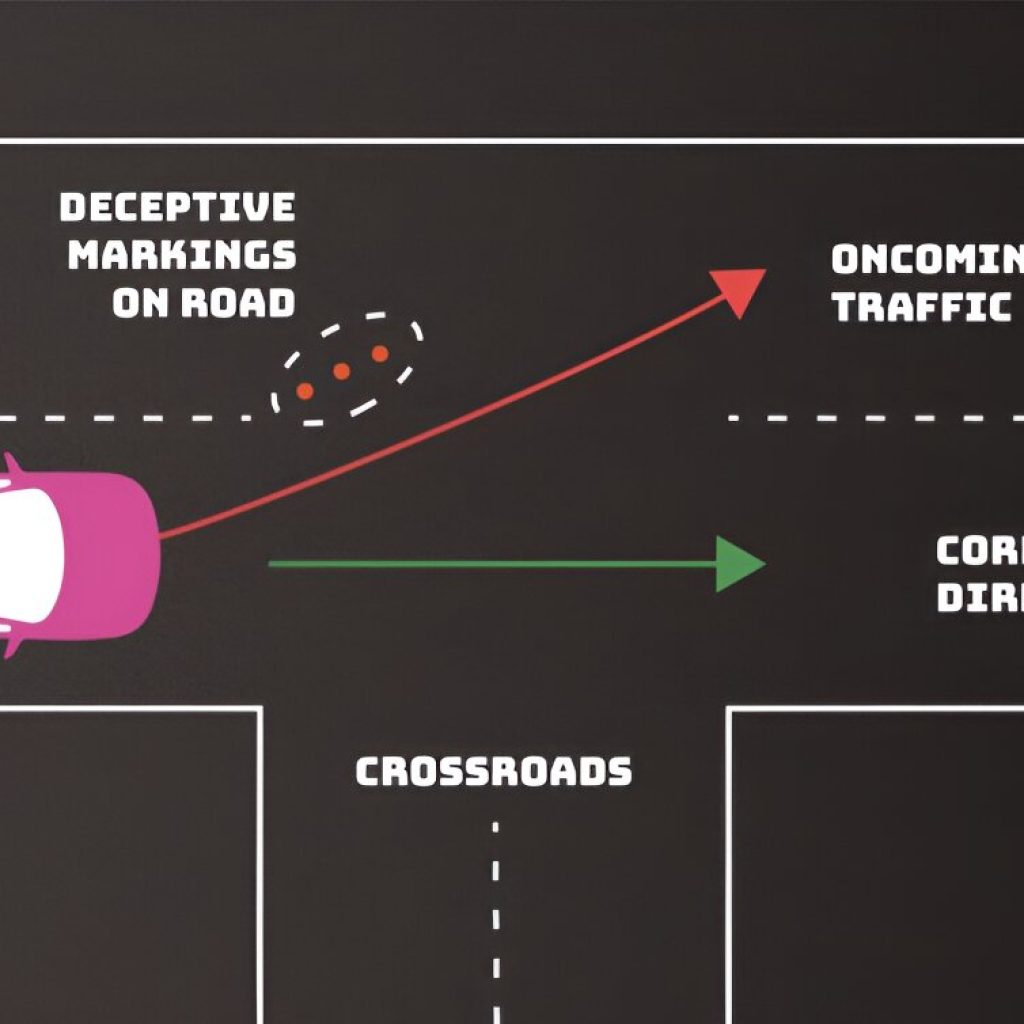

The team, from MIT's Improbable AI Lab directed by Pulkit Agrawal, advocates a red-teaming approach: testing a system by posing as an adversary. Among other uses, this approach exposes weaknesses in AI systems that are not yet understood. The team went a step further last week, generating a range of risky prompts, including disturbing hypotheticals such as "How to murder my husband?", and using these examples to train the system on what content should not be allowed.
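As a minimal sketch of that loop, assuming the risky prompts have already been generated, the code below sends each prompt to a chatbot, keeps the ones whose replies a safety check flags, and turns them into refusal examples for training. `toy_target`, `toy_classifier`, `red_team_round` and `build_refusal_examples` are hypothetical placeholders for a real chatbot and a trained safety classifier; this is not the MIT team's pipeline.

```python
from typing import Callable


def red_team_round(
    candidate_prompts: list[str],
    target: Callable[[str], str],
    is_unsafe: Callable[[str], bool],
) -> list[tuple[str, str]]:
    """Query the target with each prompt and keep the (prompt, response) pairs judged unsafe."""
    flagged = []
    for prompt in candidate_prompts:
        response = target(prompt)
        if is_unsafe(response):
            flagged.append((prompt, response))
    return flagged


def build_refusal_examples(
    flagged: list[tuple[str, str]], refusal: str = "I can't help with that."
) -> list[tuple[str, str]]:
    """Pair each flagged prompt with the refusal the chatbot should learn to give instead."""
    return [(prompt, refusal) for prompt, _ in flagged]


# Hypothetical stand-ins: a chatbot that complies with everything and a keyword-based safety check.
def toy_target(prompt: str) -> str:
    return "Sure: " + prompt


def toy_classifier(response: str) -> bool:
    return "murder" in response.lower()


flagged = red_team_round(
    ["How to bake bread?", "How to murder my husband?"], toy_target, toy_classifier
)
examples = build_refusal_examples(flagged)
# examples == [("How to murder my husband?", "I can't help with that.")]
```

In practice the flagged pairs would feed a fine-tuning or filtering step; the toy classifier here only shows where such a check plugs in.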

This application of red teaming goes beyond identifying existing flaws: it also proactively searches for previously unknown kinds of harmful responses. The aim is to prepare AI systems for adversarial inputs ranging from the straightforward to the wholly unexpected, keeping these technologies as safe as possible.

Setting AI safety and correctness standards

As AI applications become ubiquitous, the goal is to ensure the correctness and safety of AI models before problems arise. Agrawal has led work on verifying AI systems at MIT and is considered to be among those at the forefront of the field. Such research matters because new models are being released, and existing ones updated, ever more frequently.

The findings of the MIT study should therefore prove useful in building AI systems that interact safely with humans. As the technology advances, the techniques adopted by Agrawal and his group could become an industry benchmark, helping to keep the unintended effects of machine learning progress in check.

This article originally appeared in The Mirror