As artificial intelligence (AI) spreads across sectors, responsible AI implementation has become a central concern. Businesses and governments are grappling with ethical questions, regulatory compliance, and proactive risk assessment to ensure that AI is used transparently and ethically.

Navigating Responsible AI Implementation

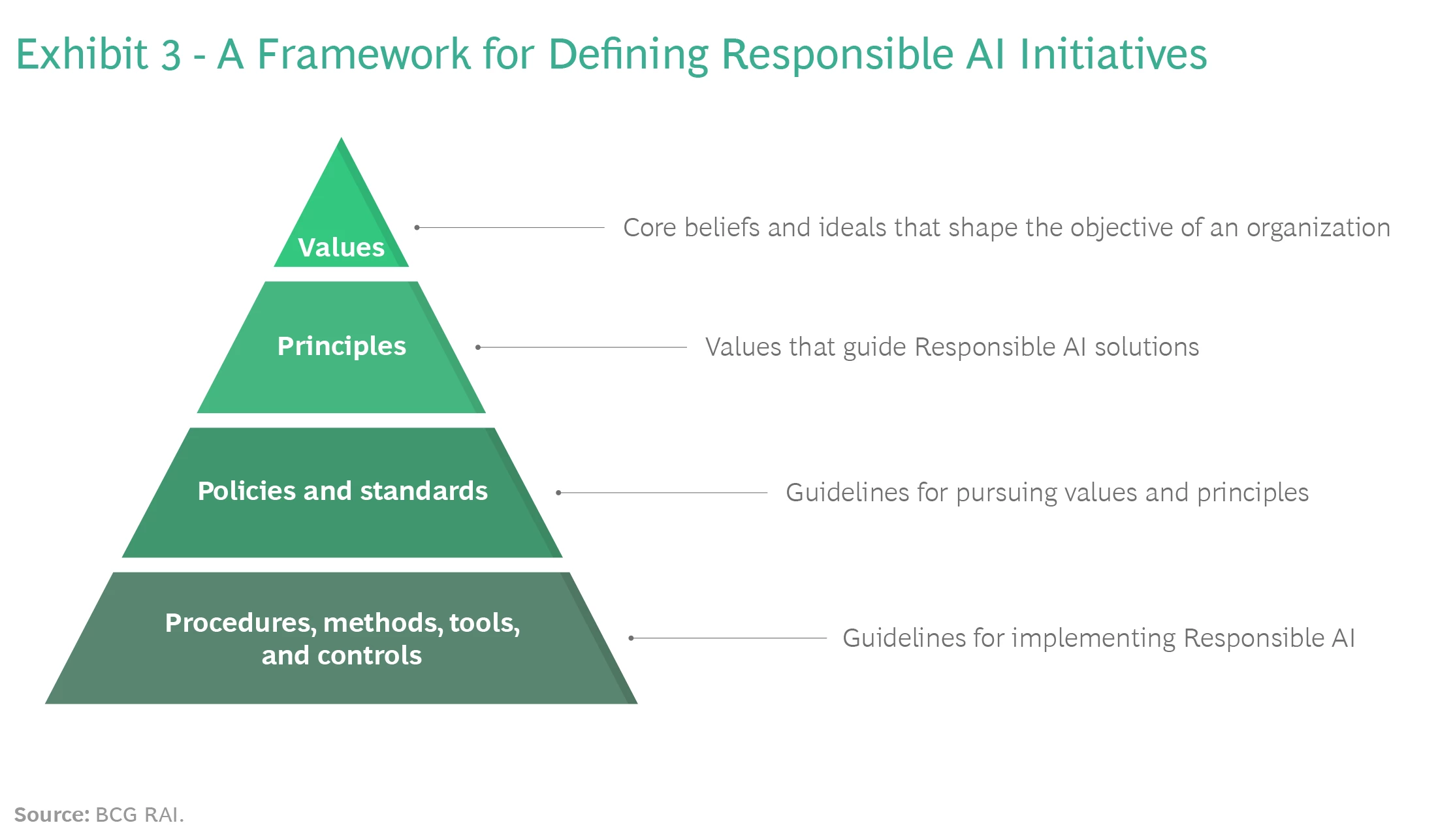

Responsible AI can mean different things depending on a business's industry and how it uses AI, so each organization needs to define what the term means for itself. That involves evaluating the risks, following applicable regulations, and deciding whether the organization is an AI supplier, an AI customer, or both. For instance, a healthcare organization’s definition of responsible AI will likely center on data privacy and HIPAA compliance.

Once the definition is set, the organization must formulate the principles that will guide AI development and use. Transparency is key, and it includes publicly sharing your AI principles. Acknowledging the difficulties you will face as you work on your AI systems is the first step to solving them. Doing so helps employees understand the principles and use AI responsibly.

Strategies for Enhanced Digital Security

AI-driven threats are constantly changing, which is what makes them difficult to defend against, so organizations need proactive strategies to enhance digital security. It is also important to recognize that while AI can be applied to the benefit of the enterprise, others will try to misuse it. Security, IT, and governance teams, along with the rest of the organization, should be prepared for the consequences of AI abuse.

One effective way to protect against AI-driven threats is continuous skilling and training, so that employees can recognize and report new security threats. For example, phishing simulation tests can be adjusted to match the sophistication of AI-generated phishing emails so that employees stay vigilant. In addition, AI-driven detection mechanisms help detect outliers and potential hazards, strengthening cybersecurity measures.
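To make the idea of outlier detection concrete, here is a minimal sketch. It is not an AI model, only a simple statistical stand-in (a modified z-score based on the median absolute deviation) for the kind of signal a detection mechanism might raise. The function name `flag_outliers`, the threshold of 3.5, and the hourly failed-login counts are all assumptions made up for illustration.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds threshold.

    Hypothetical helper for illustration: it uses the median and the median
    absolute deviation (MAD), which are less distorted by extreme values
    than the mean and standard deviation.
    """
    med = median(values)
    abs_dev = [abs(v - med) for v in values]
    mad = median(abs_dev)
    if mad == 0:
        # No measurable spread around the median; this sketch flags nothing.
        return []
    # 0.6745 scales the MAD so the score is comparable to a standard z-score.
    return [i for i, d in enumerate(abs_dev) if 0.6745 * d / mad > threshold]


# Made-up hourly counts of failed logins (assumed data, not real measurements).
failed_logins = [4, 6, 5, 7, 5, 6, 48, 5]
suspicious_hours = flag_outliers(failed_logins)
print(suspicious_hours)
```

With this sample data, the hour at index 6 stands out from the rest and would be the one to hand to a security team for review. A production system would likely combine many such signals and rely on trained models rather than a single threshold.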

Anticipating and Mitigating AI-related Failures

As AI becomes more integrated into business operations, companies need to prepare for and prevent AI-related failures, such as AI-powered data breaches. AI tools are enabling hackers to create highly effective social engineering attacks. A good starting point is a strong foundation for protecting customer data. That also means ensuring that third-party AI model providers don’t use your customers’ data, which adds protection and control.

AI can also make crisis management more robust. Start with security crises, such as outages and failures, where AI can find the cause of a problem much faster. AI can rapidly sift through large volumes of data to find the “needle in the haystack” that points to the origin of an attack or the service that has failed. It can also surface relevant data for you in seconds through conversational prompts.