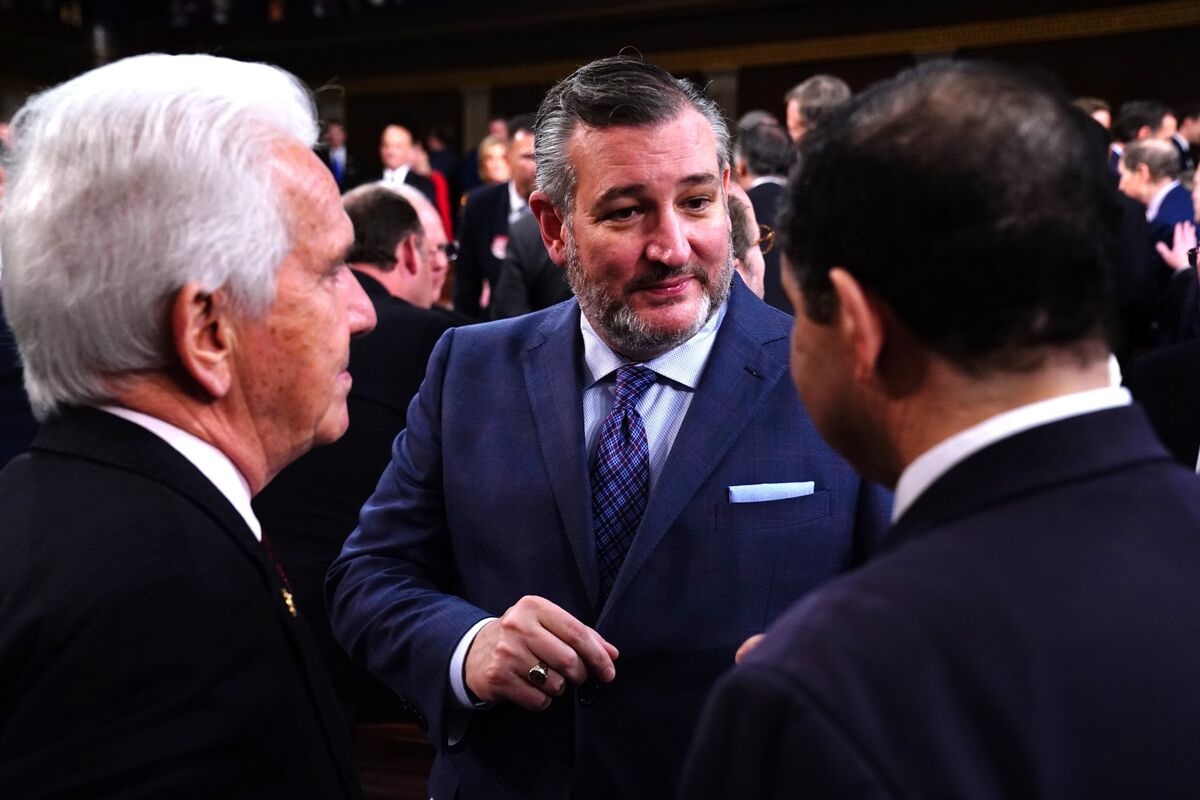

With Congress days away from wrapping up its session, Texas Senator Ted Cruz is making a last-minute push to pass a bill that criminalizes AI-generated revenge porn.

The proposal, S.4569, takes aim at deepfakes, the increasingly accessible technology that superimposes someone’s face onto pornographic images or videos without their consent. If passed, the bill would require websites and social media platforms to remove such content within 48 hours of being notified by a victim.

The bill is also bipartisan, co-sponsored by Democratic Senator Amy Klobuchar, and it sailed through the Senate unanimously on December 3. Now, all eyes are on the House, where lawmakers have just a few days left to approve the companion bill, H.R. 8989.

At a December 11 press conference, Cruz said: “Every victim should have the right to say, ‘That is me. That is my image, and you don’t have my permission to put this garbage out there.’”

Victims speak out as tech companies back the bill

Elliston Berry, a 15-year-old girl whose AI-altered image spread like wildfire across Snapchat, shared her nightmare at the press event.

“That morning I woke up, it was one of the worst feelings I have ever felt,” she said, describing how the fake image circulated for nine months before Cruz’s office intervened to have it removed. Her family’s countless efforts to get platforms to act had failed.

Berry’s story isn’t unique, and that’s the problem. Deepfake pornography has exploded, leaving victims scrambling to reclaim their privacy while tech companies play catch-up.

For Berry’s mother, reaching Cruz’s office was a turning point. It took direct intervention from a U.S. Senator to get a major social platform to pull the content. For most victims, that kind of access isn’t an option.

Tech giants Google, Meta, Microsoft, and TikTok are backing Cruz’s legislation. That support may seem surprising, but the platforms are under mounting pressure to act. Critics have long accused them of dragging their feet on removing deepfake content. Cruz’s bill forces their hand: 48 hours, no excuses.

Senator Klobuchar emphasized the need to act now. “This work is about building a future, about taking on the challenges of the new environment we live in and not just pretending it is not happening anymore.”

A problem no one can ignore

AI has made deepfake creation disturbingly simple. Tools that once required advanced skills and resources are now available to anyone with a laptop and an internet connection. Perhaps unsurprisingly, by one estimate 98% of all deepfake content online is pornographic.

The numbers are shocking. This year, the National Center for Missing and Exploited Children (NCMEC) flagged 4,700 cases of AI-generated child sexual abuse material (CSAM).

The technology also feeds the darkest corners of the internet. A recent case in Wisconsin saw a 42-year-old man arrested for creating and distributing thousands of explicit AI images of minors. Another case involved a child psychiatrist sentenced to 40 years for using AI to generate altered images of real children.

Deepfake pornography doesn’t stop with minors. Celebrities, influencers, and everyday people are being targeted at alarming rates. Anyone with a public image, or even just a social media account, can become a victim. With no federal law specifically addressing the problem, victims have little recourse.

AI is only getting more capable, and if lawmakers fail to act now, the damage will deepen. Lawmakers clearly know this, and so do the victims. Now it’s up to Congress to decide whether to fight back or let the deepfake crisis rage on unchecked.
