Researchers from the MRC Brain Network Dynamics Unit and Oxford University’s Department of Computer Science have identified a new principle that may explain how the human brain adjusts the connections between neurons during learning.

The finding deepens our understanding of learning in brain networks and could inspire faster, more robust learning algorithms for artificial intelligence (AI).

Brain learning principle: Prospective configuration

A central problem in learning is identifying which components of an information-processing pipeline are responsible for an error in its output, known as the credit assignment problem. In AI, this is solved with backpropagation, which computes how much each of a model’s parameters contributed to the output error and adjusts the parameters to reduce it.
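
To make the credit-assignment idea concrete, here is a minimal Python sketch of backpropagation on a hypothetical two-weight network (input `x`, hidden unit `h`, output `y`, all linear). The network, the function name `backprop_step` and the learning rate are illustrative assumptions, not taken from the study. The output error is passed backward through the chain rule, and each weight is adjusted in proportion to its share of the blame.

```python
# Hypothetical toy network: input x -> hidden h = w1 * x -> output y = w2 * h
# Loss: L = 0.5 * (y - target) ** 2

def backprop_step(w1, w2, x, target, lr=0.1):
    """One gradient-descent step; returns updated weights and per-weight gradients."""
    # Forward pass
    h = w1 * x
    y = w2 * h
    # Backward pass: propagate the output error back with the chain rule
    err = y - target          # dL/dy
    grad_w2 = err * h         # dL/dw2 = dL/dy * dy/dw2
    grad_w1 = err * w2 * x    # dL/dw1 = dL/dy * dy/dh * dh/dw1
    # Adjust each weight in proportion to its share of the blame
    return w1 - lr * grad_w1, w2 - lr * grad_w2, (grad_w1, grad_w2)

w1, w2 = 0.5, 2.0
for _ in range(50):
    w1, w2, _grads = backprop_step(w1, w2, x=1.0, target=3.0)
print(round(w1 * w2, 3))  # the product w1 * w2 approaches the target 3.0
```

Note that the error reaches `w1` only by passing through `w2`: the weight closer to the input is blamed according to how strongly its output is used downstream.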

It has been widely assumed that the human brain uses a similar learning principle. However, the biological brain outperforms current machine learning systems in several respects.

For instance, humans can learn new information after encountering it just once, whereas artificial systems often need hundreds of repetitions of the same data to learn it. Moreover, humans can acquire new knowledge while retaining what they already know, whereas training artificial neural networks on new data can interfere with and degrade existing knowledge.

These observations led the researchers to look for the fundamental principle governing how the brain learns. They analyzed and simulated existing sets of mathematical equations that describe changes in neuronal activity and synaptic connections. What they found challenged the prevailing view.

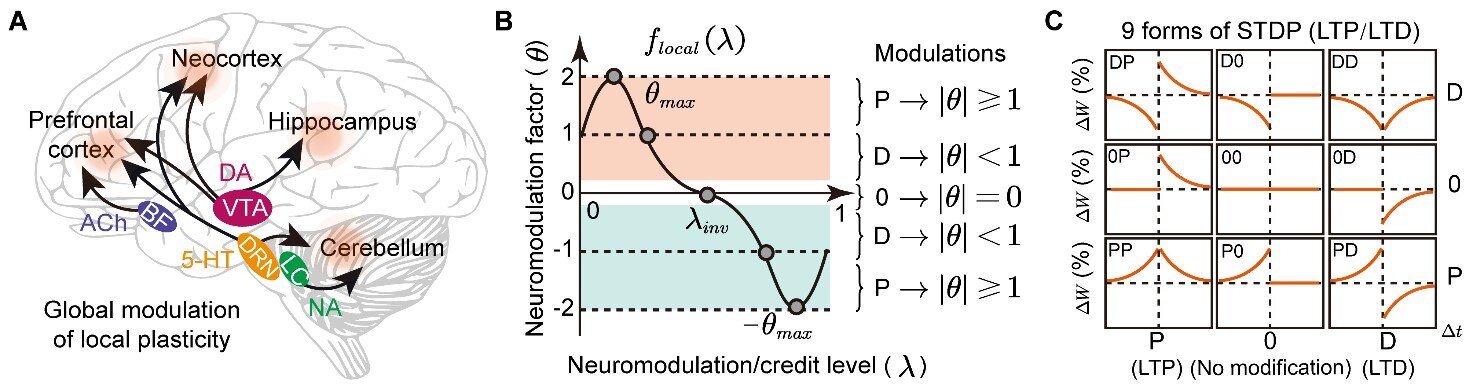

In artificial neural networks, an external algorithm modifies the synaptic connections (weights) directly to minimize errors. The researchers propose instead that the brain first settles the activity of its neurons into an optimal, balanced configuration and only then adjusts the synaptic connections.

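The researchers call this approach “prospective configuration”: neural activity first shifts toward the pattern it should have after learning, and the synaptic changes then consolidate that pattern. They argue that this makes human learning efficient, because it reduces interference with existing knowledge and so preserves it, which in turn speeds up learning.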
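
The two-phase idea can be sketched on a toy chain of one input, one hidden unit and one output. This sketch assumes a predictive coding network, in which an “energy” sums the squared prediction errors at each layer; that choice, the step sizes and the names `relax_hidden` and `prospective_step` are our assumptions, not the study’s code. In phase 1 the input and output are clamped and the hidden activity settles to minimize the energy; in phase 2 each weight changes using only the prediction error at its own layer.

```python
# Hypothetical toy chain: input x -> hidden h -> output y, linear predictions.
# Assumed energy (predictive coding style), the sum of squared prediction errors:
#   E = 0.5 * (h - w1 * x) ** 2 + 0.5 * (y - w2 * h) ** 2

def relax_hidden(w1, w2, x, target, steps=200, rate=0.1):
    """Phase 1: with x and the output clamped to target, let h settle to minimize E.

    Gradient descent on h converges when rate * (1 + w2 ** 2) < 2.
    """
    h = w1 * x  # start from the ordinary feedforward prediction
    for _ in range(steps):
        dE_dh = (h - w1 * x) - w2 * (target - w2 * h)
        h -= rate * dE_dh
    return h

def prospective_step(w1, w2, x, target, lr=0.1):
    """Phase 2: with activity settled, update each weight from its local error only."""
    h = relax_hidden(w1, w2, x, target)
    e_hidden = h - w1 * x        # prediction error at the hidden layer
    e_output = target - w2 * h   # prediction error at the output layer
    # Gradient descent on E with respect to each weight
    return w1 + lr * e_hidden * x, w2 + lr * e_output * h, h

# With both weights at 1 and a target of 0, h settles halfway between its
# feedforward prediction (1.0) and the value the target implies (0.0).
print(round(relax_hidden(1.0, 1.0, x=1.0, target=0.0), 3))  # 0.5
```

Unlike backpropagation, the weight updates here never pass an error signal across layers; the settled activity `h` already encodes where each layer should move.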

Simulation and validation

The researchers supported their findings with computer simulations, showing that models using prospective configuration outperformed artificial neural networks trained with backpropagation on tasks typical of those faced by animals and humans in natural settings.

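To illustrate the concept, the researchers use the example of a bear fishing for salmon. The bear can see the river and has learned that this predicts both hearing the river and smelling the salmon. Suppose the bear arrives with a damaged ear and cannot hear the river. In an artificial neural network trained with backpropagation, the missing sound produces an error that changes many connections, including those linking the river to the salmon, so the network also comes to expect less smell of salmon and may wrongly conclude that there are no salmon in the river.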

In the animal brain, however, the missing sound does not interfere with the knowledge that the salmon can still be smelled, so the bear concludes that the salmon are still there and can continue fishing.
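
The bear example can be reproduced in toy form. In this sketch, which is our own construction and not the study’s simulation, seeing the river (`X`) drives one hidden unit that predicts both the sound (weight `w2`) and the smell (weight `w3`). All weights start at 1, the damaged ear makes the observed sound 0 while the smell stays 1, and each rule gets one learning step with the same learning rate. The prospective rule assumes the same predictive-coding-style energy as a sketch of the two-phase idea; all names and numbers are illustrative assumptions.

```python
# Hypothetical toy bear network (names and numbers are illustrative assumptions):
#   sees_river X -> hidden h -> predicted sound (w2 * h), predicted smell (w3 * h)
X, SOUND, SMELL, LR = 1.0, 0.0, 1.0, 0.5  # damaged ear: sound 0, smell still 1

def backprop(w1, w2, w3):
    h = w1 * X
    e_sound, e_smell = w2 * h - SOUND, w3 * h - SMELL  # output errors
    g1 = (e_sound * w2 + e_smell * w3) * X             # blame reaching shared w1
    return w1 - LR * g1, w2 - LR * e_sound * h, w3 - LR * e_smell * h

def prospective(w1, w2, w3, steps=200, rate=0.1):
    h = w1 * X
    for _ in range(steps):  # phase 1: settle h with both outputs clamped
        h -= rate * ((h - w1 * X) - w2 * (SOUND - w2 * h) - w3 * (SMELL - w3 * h))
    # phase 2: local weight updates from the settled activity
    return (w1 + LR * (h - w1 * X) * X,
            w2 + LR * (SOUND - w2 * h) * h,
            w3 + LR * (SMELL - w3 * h) * h)

for name, rule in [("backprop", backprop), ("prospective", prospective)]:
    w1, w2, w3 = rule(1.0, 1.0, 1.0)
    print(name, "predicted smell:", round(w3 * w1 * X, 3))
# backprop predicted smell: 0.5
# prospective predicted smell: 0.926
```

Under backpropagation the sound error flows back through `w1`, the weight shared by both predictions, so the expected smell halves. With the prospective rule the hidden activity first settles at a compromise (2/3) that still supports the smell, and the smell weight `w3` actually increases, so the expected smell drops only slightly. These numbers show the qualitative effect only and are not results from the study.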

Bridging the gap

Lead researcher Professor Rafal Bogacz, from the MRC Brain Network Dynamics Unit and Oxford’s Nuffield Department of Clinical Neurosciences, emphasized the need to bridge the gap between abstract models and our understanding of the brain’s anatomy. Future research will aim to establish how prospective configuration is implemented in anatomically identified cortical networks.

Dr. Yuhang Song, the study’s first author, noted that prospective configuration is difficult to implement on existing computers because they work in a fundamentally different way from the biological brain. He called for the development of a new type of computer, or dedicated brain-inspired hardware, that could implement prospective configuration rapidly and with little energy.