Researchers have identified a new type of attack on self-driving car systems that can cause the AI to ignore roadside traffic signs. The attack targets camera-based computer vision, which is the primary way autonomous vehicles perceive their surroundings. It exploits the camera's rolling shutter with a light-emitting diode (LED) to mislead the vehicle's AI system.

Self-driving systems can be at risk

Rapidly changing light from fast-flashing LEDs can alter the colors a camera perceives. This happens because of the way CMOS cameras, the most widely used cameras on cars, capture images.

The effect is loosely comparable to what people experience when a light flashes rapidly in their eyes and their color perception is altered for a few seconds.

Camera sensors usually come in one of two types: charge-coupled devices (CCDs) or complementary metal-oxide-semiconductor (CMOS) sensors. A CCD captures the entire frame at once by exposing all pixels simultaneously. CMOS is a different game: it uses an electronic shutter that captures the image line by line, much like a traditional home printer builds up an image one line at a time.

The drawback is that the lines of a CMOS frame are captured at slightly different times. As a result, rapidly changing light can distort the image, producing bands of different color shades on the sensor.
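To make the line-by-line timing concrete, here is a minimal simulation sketch under simplifying assumptions. The LED's red-green-blue color cycle, the 1 kHz flicker rate, the 10 µs delay between row starts, and the 50 µs exposure are illustrative values chosen here, not figures from the study, and the helper names (`led_colour`, `capture`, `stripe_pattern`) are hypothetical. Each sensor row averages the light it receives during its own exposure window. With a rolling shutter those windows are staggered, so different rows record different LED colors; with a global shutter every row shares one window.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]

# Assumed LED behaviour: cycles red -> green -> blue, each colour for one third of a period.
LED_COLOURS: List[RGB] = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]


def led_colour(t: float, freq_hz: float) -> RGB:
    """Colour emitted by the hypothetical flickering LED at time t (seconds)."""
    phase = (t * freq_hz) % 1.0
    idx = min(int(phase * len(LED_COLOURS)), len(LED_COLOURS) - 1)
    return LED_COLOURS[idx]


def expose(start_s: float, exposure_s: float, freq_hz: float, samples: int = 50) -> RGB:
    """Average the LED colour over one exposure window (simple numerical integration)."""
    acc = [0.0, 0.0, 0.0]
    for k in range(samples):
        t = start_s + exposure_s * (k + 0.5) / samples
        for i, channel in enumerate(led_colour(t, freq_hz)):
            acc[i] += channel / samples
    return (acc[0], acc[1], acc[2])


def capture(n_rows: int, row_delay_s: float, exposure_s: float, freq_hz: float) -> List[RGB]:
    """Capture one frame; row r starts exposing at r * row_delay_s.

    row_delay_s > 0 models a rolling shutter; row_delay_s == 0 models a global shutter.
    """
    return [expose(r * row_delay_s, exposure_s, freq_hz) for r in range(n_rows)]


def stripe_pattern(rows: List[RGB]) -> str:
    """Label each row by its dominant channel and collapse consecutive repeats."""
    labels = ["RGB"[max(range(3), key=lambda i: row[i])] for row in rows]
    runs = [labels[0]]
    for label in labels[1:]:
        if label != runs[-1]:
            runs.append(label)
    return "".join(runs)


if __name__ == "__main__":
    # Assumed parameters: 300 rows, 10 us between row starts, 50 us exposure, 1 kHz flicker.
    rolling = capture(300, 10e-6, 50e-6, 1000.0)
    global_shutter = capture(300, 0.0, 50e-6, 1000.0)
    print("rolling shutter bands:", stripe_pattern(rolling))
    print("global shutter bands: ", stripe_pattern(global_shutter))
```

With these assumed values, the rolling-shutter frame breaks into repeating red, green, and blue bands, while the global-shutter frame comes out as a single uniform color. The bands arise purely from the staggered row timing, which is the property an attacker's flickering light exploits.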

Even so, CMOS sensors are widely adopted in all types of cameras, including those on vehicles, because they are cheaper and offer a good balance between image quality and cost. Tesla and other vehicle makers use CMOS cameras in their vehicles.

Study findings

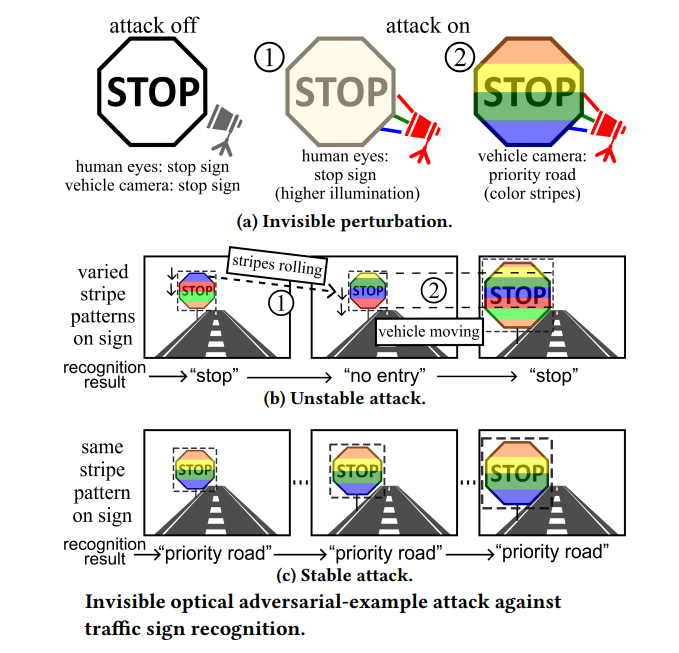

In a recent study, researchers identified this behavior as a potential risk for self-driving cars: an attacker who controls the light source can produce colored stripes in the captured image and mislead the computer vision system's interpretation of it.

Researchers have used LEDs to create flickering ambient light that misleads image classification in the area under attack, and have fired a laser into a camera lens to produce colored stripes that disrupt object detection.

Previous studies, however, were limited to single-frame tests and did not go as far as creating a sequence of frames to simulate a continuous, stable attack in a controlled environment. The current study aimed to simulate such a stable attack, and it showed severe security implications for autonomous vehicles.

An LED placed close to a traffic sign projected a controlled, fluctuating light onto the sign. The fluctuation is too fast for the human eye to perceive, so the LED looks like a harmless lighting device. On camera, however, the result was quite different: the light introduced colored stripes that caused the traffic sign to be misrecognized.

For the attack to fully mislead the self-driving system into making wrong decisions, though, the effect must be consistent across a number of consecutive frames. If the attack is not stable, the system may detect the malfunction and put the vehicle into a fail-safe mode, such as switching to manual driving.
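Why stability matters can be illustrated with a simple cross-frame consistency check. The sketch below is an assumption for illustration only: the sliding-window majority vote, the window size, the 80% agreement threshold, and the class and label names (`SignVoteFilter`, `"stop"`, `"speed_limit"`) are hypothetical, and the article does not describe how any production vehicle performs such checks. The filter accepts a sign label only when it dominates recent frames and otherwise reports an inconsistency.

```python
from collections import Counter, deque
from typing import Deque, Optional


class SignVoteFilter:
    """Hypothetical temporal filter: accept a sign label only if it dominates recent frames."""

    def __init__(self, window: int = 10, min_agreement: float = 0.8) -> None:
        self.frames: Deque[str] = deque(maxlen=window)
        self.min_agreement = min_agreement

    def update(self, label: str) -> Optional[str]:
        """Add one per-frame classification; return the stable label, or None if inconsistent."""
        self.frames.append(label)
        if len(self.frames) < self.frames.maxlen:
            return None  # not enough frames yet to judge stability
        top_label, count = Counter(self.frames).most_common(1)[0]
        if count / len(self.frames) >= self.min_agreement:
            return top_label
        # Frames disagree: in this sketch the caller could treat this as a fault and fall back.
        return None


if __name__ == "__main__":
    # Unstable attack: the misreading flips from frame to frame, so it never passes the filter.
    unstable = SignVoteFilter(window=5, min_agreement=0.8)
    print([unstable.update(x) for x in ["stop", "speed_limit"] * 3])
    # Stable attack: the same wrong label every frame is accepted once the window fills.
    stable = SignVoteFilter(window=5, min_agreement=0.8)
    print([stable.update("speed_limit") for _ in range(6)])
```

In this sketch, an attack that only corrupts some frames produces disagreement and never yields an accepted label, whereas a misclassification that persists across every frame passes the check unchallenged. That is why a consistent, multi-frame attack is the more dangerous case.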