Thea Ramirez, a former social worker, developed an artificial intelligence-powered tool called Family-Match to help social service agencies find suitable adoptive parents for some of the nation’s most vulnerable children. These children often have complex needs or disabilities, or have experienced significant trauma. Child protective services agencies have struggled for years to find permanent homes for them. Ramirez claimed that her algorithm, designed by former researchers from an online dating service, could revolutionize adoption matching, but an Associated Press investigation revealed significant limitations and challenges.

The promise of Family-Match

Ramirez introduced Family-Match as a technological solution that could predict the long-term success of adoptive placements. She argued it used science rather than mere preferences to establish a predictive score for potential adoptive families. The algorithm aimed to improve adoption success rates across the United States and enhance the efficiency of cash-strapped child welfare agencies.

Limited results and challenges

Despite its promising premise, Family-Match’s performance has fallen short of expectations in the states where it has been used. According to self-reported data from Family-Match obtained by AP through public records requests, the AI tool has produced limited results.

Virginia and Georgia’s experience

Virginia and Georgia initially adopted the algorithm but later dropped it after trial runs, citing its failure to produce successful adoptions. Both states have since resumed working with Ramirez’s nonprofit, Adoption-Share.

Tennessee’s struggles

Tennessee had difficulty implementing the program and ultimately scrapped it after spending more than two years on the project, citing incompatibility with its internal systems.

Mixed experiences in Florida

In Florida, where Family-Match has expanded its use, social workers reported mixed experiences with the algorithm. Although Family-Match claimed credit for numerous placements, questions arose about the accuracy of those claims.

Lack of transparency and data ownership

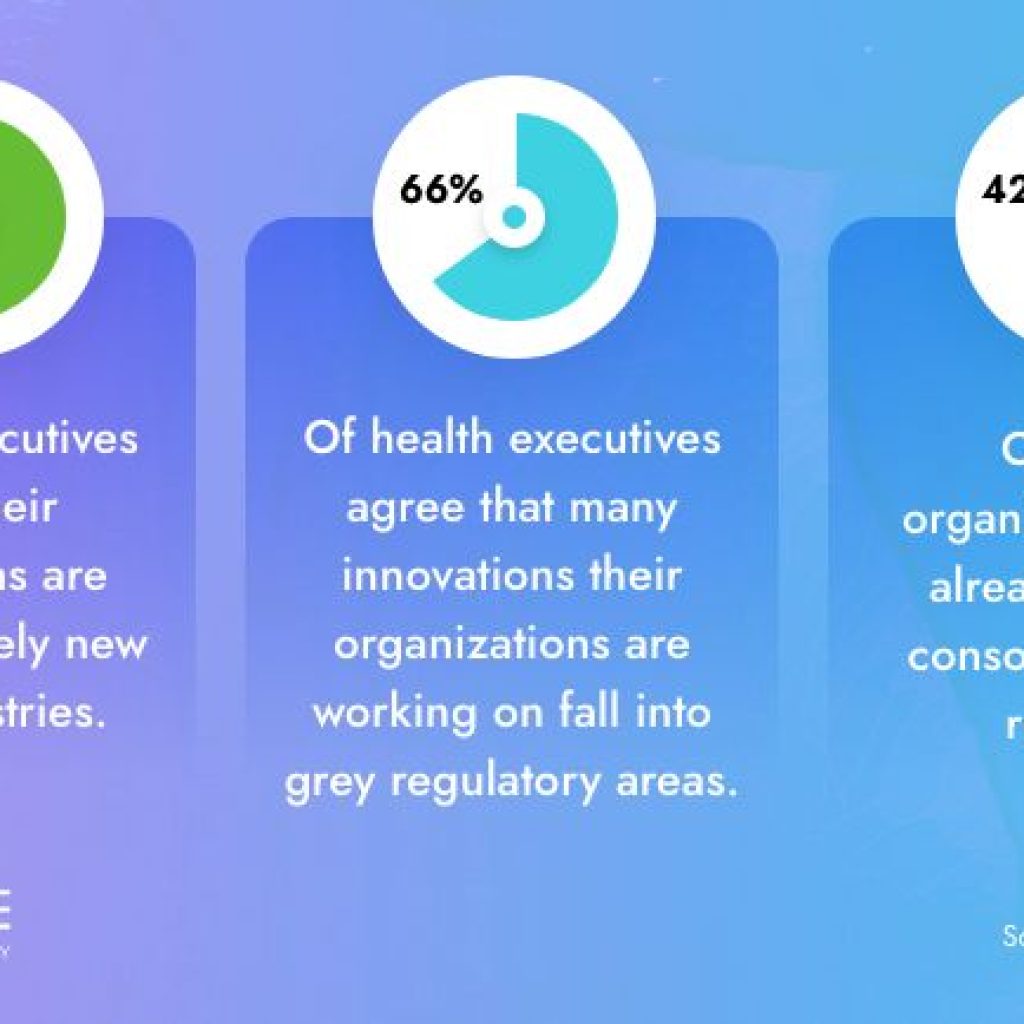

State officials expressed concern that Family-Match disclosed little about how its algorithm works. In addition, Adoption-Share, the nonprofit behind the tool, owned some of the sensitive data collected through Family-Match, raising privacy and data security concerns.

Unpredictability of human behavior

Child welfare experts emphasized that predicting human behavior is inherently difficult, especially for adolescents with complex needs. Bonni Goodwin, a child welfare data expert, stressed that there is no foolproof way to do so.

Ramirez’s background and motivation

Ramirez’s experience as a social worker and her desire to promote adoption as a way to reduce abortions shaped her development of Family-Match. She previously launched a website connecting pregnant women with prospective adoptive parents, which highlighted anti-abortion counseling centers. She has said, however, that Family-Match is not affiliated with such centers.

Collaboration with eharmony researchers

Ramirez collaborated with Gian Gonzaga, a research scientist who had managed algorithms at eharmony, to create the adoption matchmaking tool. Gonzaga and his wife, Heather Setrakian, developed the Family-Match model, drawing on eharmony’s approach to matchmaking.

State-by-state experiences with Family-Match

Social workers explained how Family-Match operates: Adults seeking to adopt submit survey responses through the algorithm’s online platform, while foster parents or social workers enter each child’s information. The algorithm then generates a “relational fit” score and displays a list of prospective parents for each child. Social workers vet the candidates, and in the best-case scenario, a child is matched and placed in a home for a trial stay.
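Family-Match’s actual model is proprietary, and state officials have criticized how little it reveals, so the following is only a generic sketch of the final step described above: taking fit scores that have already been computed and producing a shortlist for social workers to vet. The `Candidate` class, its `fit_score` values, and the `rank_candidates` function are hypothetical illustrations, and the assumption that the list is ordered from highest to lowest score is ours, not the AP’s.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    # Hypothetical record: the real Family-Match data model is not public.
    family_id: str
    fit_score: float  # assumed precomputed "relational fit" score


def rank_candidates(candidates: list[Candidate], top_n: int = 5) -> list[Candidate]:
    """Return up to top_n candidates, highest score first.

    The output is only a shortlist: social workers still vet each family
    before any trial placement.
    """
    return sorted(candidates, key=lambda c: c.fit_score, reverse=True)[:top_n]


if __name__ == "__main__":
    pool = [Candidate("A", 0.62), Candidate("B", 0.81), Candidate("C", 0.47)]
    shortlist = rank_candidates(pool, top_n=2)
    print([c.family_id for c in shortlist])  # ['B', 'A']
```

The sketch stops at ranking on purpose: in the workflow social workers described, the score narrows the field, but people decide whether a child is placed.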

Virginia’s two-year test of Family-Match yielded only one known adoption, and local staff reported that they did not find the tool particularly useful.

Georgia ended its initial pilot of Family-Match due to its ineffectiveness, but later resumed using it.

In Florida, where the program expanded, various child welfare agencies gave Family-Match mixed reviews. Its success was difficult to assess because of discrepancies in the reported data.

State officials expressed concerns about how Family-Match scored families using sensitive variables and questioned whether certain data points were necessary. Some versions of the algorithm’s questionnaire included questions about household income and religious beliefs.

Social welfare advocates and data security experts have raised concerns about government agencies’ growing reliance on predictive analytics, warning that such tools can perpetuate racial disparities and may discriminate against families based on characteristics they cannot change.

Expansion efforts

Despite these challenges, Adoption-Share is seeking to expand, aiming to bring Family-Match to places like New York City, Delaware, and Missouri. It recently secured a deal with the Florida Department of Health to build an algorithm to increase the pool of families willing to foster and adopt medically complex children.

Family-Match, the AI adoption-matching tool developed by Thea Ramirez, was initially promoted as a way to find suitable adoptive parents for vulnerable foster children. However, it has faced limitations and challenges, and states have reported mixed results. Questions about data privacy, transparency, and the algorithm’s accuracy have troubled child welfare experts and advocates. Even so, Adoption-Share continues its efforts to expand the tool’s use across the United States.