In recent years, there has been a significant surge in the development and use of generative AI tools that can replicate people’s voices and create convincing video clones of individuals. These technologies can generate new content, including text, images, videos, and audio, by drawing on the data they were trained on. This rapid evolution in generative AI has raised both excitement and concerns.

How generative AI videos operate

One of the remarkable features of generative AI is its ability to create lifelike video “clones” of individuals from as little as a two-minute recording of their real voice. Once created, a clone can be made to say virtually anything by inputting a script, while keeping the original person’s accent, pitch, and tone. Some advanced tools even offer automatic translation, allowing the clone’s voice to be reproduced in multiple languages while still sounding like the original speaker. Additionally, AI video platforms give users access to extensive libraries of stock images and footage for creating new content.

AI’s dual role: Creativity and manipulation

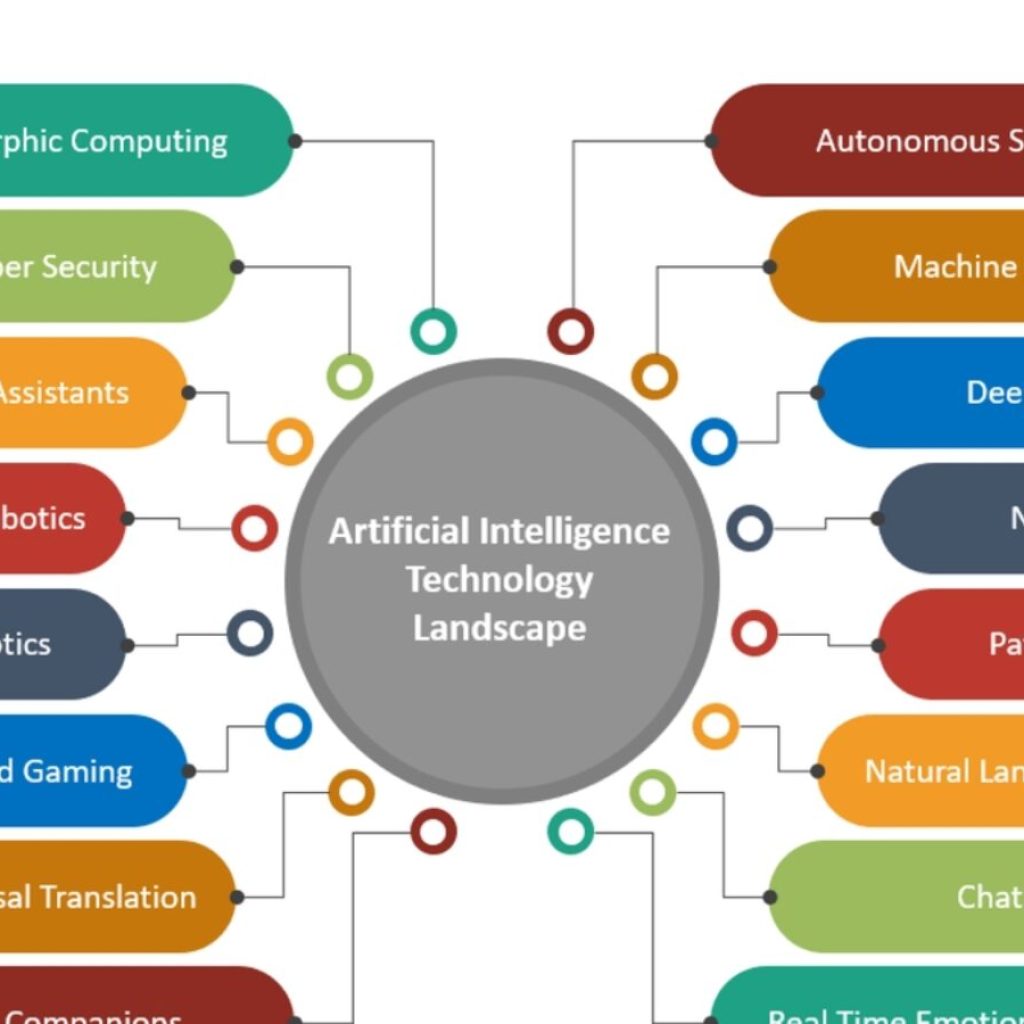

Generative AI has found applications across various fields, such as marketing, sales, education, translation, and news publishing, enhancing the creative process and saving time. However, the same technology has a darker side, as it can be exploited for deceptive purposes. Misinformation experts are increasingly concerned about the potential misuse of readily available AI tools, some of which are freely accessible online.

The threat of AI-driven misinformation

There is growing apprehension that malicious actors could harness these tools to disseminate falsehoods, deceive, or scam individuals. Instances of AI-generated voices and videos being used for misinformation and manipulation have already emerged. For example, an AI-generated voice was added to authentic footage of Prime Minister Anthony Albanese, falsely promoting a financial scam on social media. Similarly, videos of Treasurer Jim Chalmers and other public figures were manipulated using AI to deceive the public.

AI in news broadcasting and beyond

The impact of generative AI extends to news broadcasting as well. A start-up global news channel named Channel 1 is set to launch in 2024, featuring AI hosts that closely resemble real people. These AI avatars will present news produced by human journalists. This innovation raises questions about the authenticity and transparency of news delivery.

The call for collective action

Addressing the challenges posed by AI-driven misinformation requires a collaborative approach. Experts emphasize the need for greater information transparency and literacy among the public. In the face of AI-generated content, individuals are urged to exercise skepticism and ask critical questions about the sources and authenticity of the information they encounter. The responsibility of distinguishing fact from fiction ultimately falls on the shoulders of both content consumers and creators.

AI’s impact in 2024

The year 2023 marked a pivotal period for AI, with widespread adoption of generative AI tools and platforms. OpenAI’s ChatGPT, for instance, reached millions of users in its first two months, changing the way people interact with AI. While many people have tried these technologies in professional settings, a substantial percentage have kept their usage to themselves.

In 2024, AI is expected to further integrate into everyday life and work. AI tools have the potential to improve marketing, customer service, agricultural processes, and scientific research by offering accurate and reliable information. Environmental projects can benefit from AI by optimizing sustainable technologies. Additionally, AI can enhance access to medical advice for remote communities, contributing to global well-being.

Ensuring responsible AI usage

As AI becomes increasingly embedded in society, the importance of trust and ethical usage cannot be overstated. To reap the benefits of AI, it is crucial to establish robust standards and regulations. Ensuring that AI-generated content is accurate, fair, and unbiased is paramount. Individuals and organizations must also be mindful of their responsibilities when using AI, as existing laws still apply.

The rise of generative AI brings both promise and challenges. While these technologies offer remarkable opportunities for creativity and efficiency, they also pose a significant risk when misused. The year 2024 marks a crucial moment in shaping the responsible and ethical use of AI in various aspects of life and work. It calls for a collective effort to harness the potential of AI for the benefit of society while safeguarding against misinformation and manipulation.