As more of daily life moves online, Artificial Intelligence (AI) is increasingly undermining the reliability of the information people find there. AI-generated images in particular have become a growing source of misinformation, and they are eroding public trust in what appears online.

The UK fact-checking charity Full Fact has raised the alarm about misleading images flooding the internet and has questioned whether existing regulation is adequate to deal with them. Central to its concerns is the need for more media literacy funding, so that the public is better equipped to recognize AI-fueled deception.

The surge in deceptive visuals points to a broader problem than the sharing of individual false claims. Full Fact’s chief executive, Chris Morris, notes that AI tools once confined to experts are now available to anyone with an internet connection.

The consequences are already visible. Fake AI images, such as the widely shared picture of Pope Francis wearing a puffer jacket, circulate largely unchecked, and thousands of people end up spreading false information without realizing it. In light of such incidents, Full Fact argues that the responsibility for tackling the problem cannot rest solely with news outlets and fact-checkers.

Deceptive images in circulation

Full Fact’s checks have documented a series of troubling incidents that show how AI-generated images damage public trust. Fake mugshots of former US president Donald Trump and the image of Pope Francis in a puffer jacket are prominent examples of content that fooled many users. Manipulated photographs of prominent figures such as the Duke of Sussex and the Prince of Wales have also circulated widely on social media.

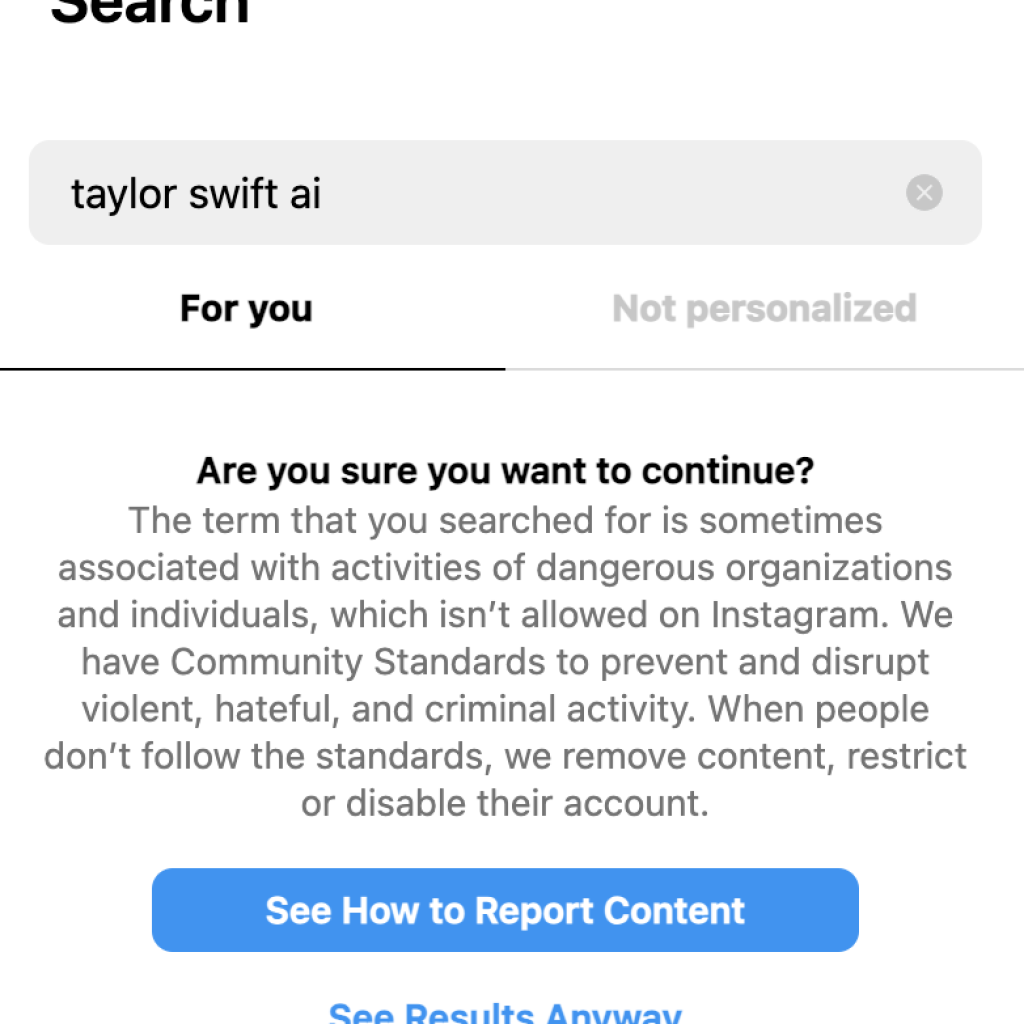

Although these incidents may seem unrelated, Full Fact suggests they form a pattern whose effect is not merely to persuade people of a particular claim but to erode trust in information as a whole. The charity argues that a flood of low-quality, manipulated content risks swamping search results and making authentic information harder to find online. As AI applications develop rapidly and powerful image manipulation tools become widely available, the need for a proactive response grows more urgent.

The call for media literacy funding

Morris stresses the need for swift government action against the growing threat of AI-generated misinformation. The core problem, he argues, is that AI image tools are now available to the general public, allowing misinformation to spread without adequate checks. Because news outlets and fact-checkers cannot tackle this alone, he calls for a firm commitment to media literacy funding so that people can learn to tell genuine content from manipulated content.

The UK’s Online Safety Act, presented as a safeguard against online harms, also comes under scrutiny for failing to address the specific challenges posed by AI-generated content. Morris argues that the current legislation does not cover the foreseeable harms arising from content manipulated with AI tools. Without a substantial increase in resources for media literacy, he warns, the online information environment will become increasingly hazardous, undermining public trust and, with it, democracy itself.

AI-generated images and the imperative for digital vigilance

As AI reshapes the online information landscape, a fundamental question arises: can society navigate this changing terrain with resilience and discernment? The calls for greater media literacy funding and stronger regulation are, at their core, a response to the rising volume of AI-generated deception.

At a time when the authenticity of what we see online is increasingly in doubt, strengthening our defenses against misinformation becomes essential. How can we ensure that the rise of AI does not become synonymous with the erosion of trust, particularly at critical moments such as elections? Part of the answer lies in a shared commitment to an online environment built on transparency and strong media literacy.