Quantstamp's Richard Ma explained that the coming surge in “sophisticated” AI phishing scams could pose an existential threat to crypto organizations.

With the field of artificial intelligence evolving at near breakneck speed, scammers now have access to tools that can help them execute highly sophisticated attacks en masse, warns the co-founder of Web3 security firm Quantstamp.

Speaking to Cointelegraph at Korea Blockchain Week, Quantstamp's Richard Ma explained that while social engineering attacks have been around for some time, AI is helping hackers become “a lot more convincing” and increase the success rate of their attacks.

To illustrate what the new generation of AI-powered attacks looks like, Ma recalled an incident involving one of Quantstamp’s clients, in which an attacker pretended to be the CTO of the targeted firm.

“He began messaging one of the other engineers in the company, saying ‘hey, we have this emergency, here's what's going on’ and engaging them in a bunch of conversations before asking them for anything,” said Ma.

Apparently there’s an increase in person specific targeted scams, rather than just playing numbers game… using AI and ChatGPT scammers can write code fast, and new tech means it’s more profitable for attackers until software is created to fight back.

— MoistenedTart.blsky.social (@MoistenedTart) August 31, 2023

Ma said these extra steps add a layer of complexity to attacks, making it far more likely that someone will hand over important information.

“Before AI, [scammers] might just ask you for a gift card or to pay them Bitcoin because it's an emergency. Now they do a lot more extra steps in the conversation beforehand to establish legitimacy.”

Ultimately, Ma said the existential threat posed by sophisticated AI lies in the sheer scale at which these types of attacks can be executed.

By leveraging automated AI systems, attackers could spin up social engineering attacks and other advanced scams across thousands of different organizations with very little human involvement.

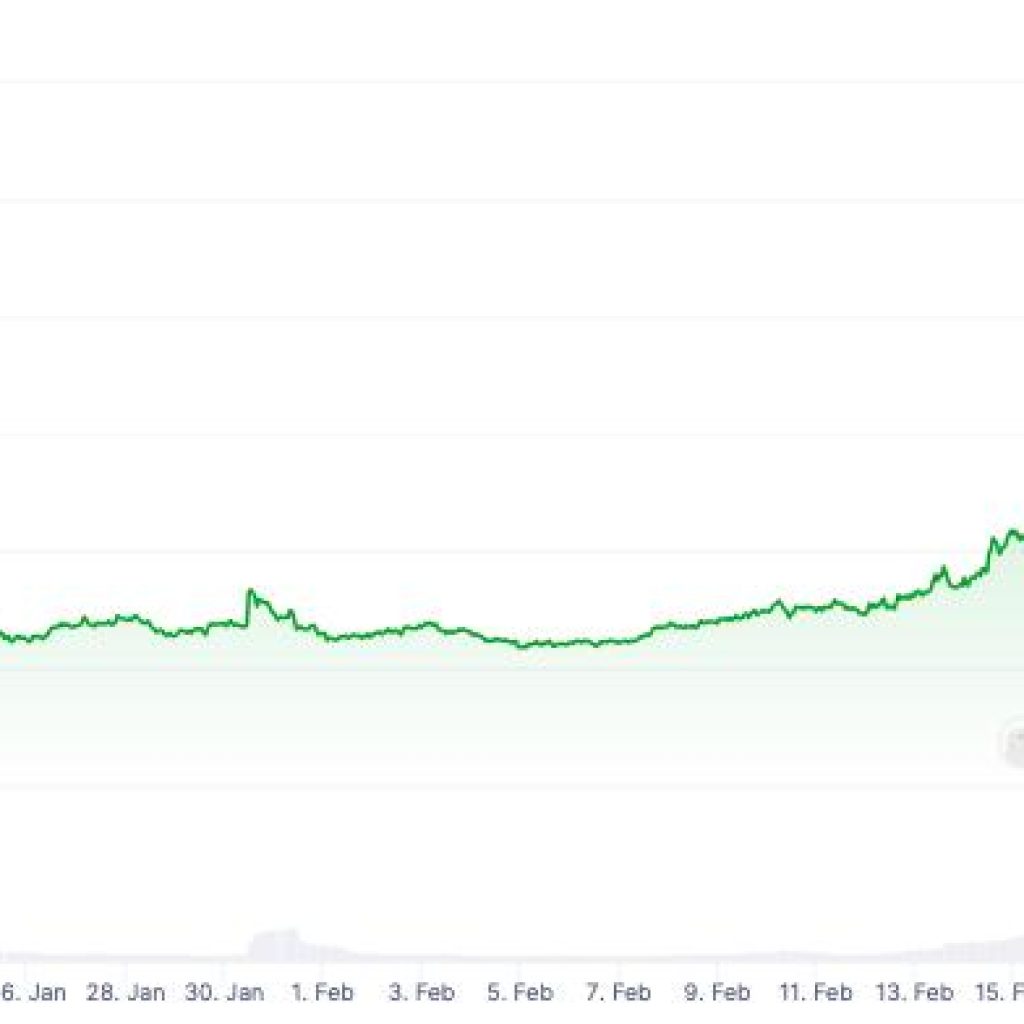

How are AI Chat and ChatGPT scams affecting the markets? Find out from The Market Report hosts Sam Bourgi (@forgeforth_) and Marcel Pechman (@noshitcoins) on our latest episode: https://t.co/wrfGQC0aO4 pic.twitter.com/kHH6hcisd9

— Cointelegraph (@Cointelegraph) February 27, 2023

“In crypto, there's a lot of databases with all the contact information for the key people from each project. Once the hackers have access to that, they can have an AI that messages all of these people in different ways,” he said.

“It's pretty hard to train your whole company to be able to not respond to those things.”

While the scale and complexity of AI-powered scams may seem intimidating, Ma offered some straightforward advice to individuals and organizations looking to protect themselves.

The most important protective measure is to avoid sending any sensitive information via email or text. Ma said that organizations should confine all communication of important data to Slack or other internal channels.

“As a general rule, stick to the company’s internal communication channel and double check everything.”

Finally, Ma said that companies should invest in anti-phishing software that filters automated emails from bots and AI. He said that Quantstamp uses anti-phishing software from a company called IronScales, which offers email-based security services.

“We're just at the start of this arms race, and it's only going to get harder to distinguish between humans and convincing AI.”