In today’s digital age, where every click and interaction leaves a digital trace, the relationship between artificial intelligence (AI) and data privacy has become a central topic of discussion. AI’s rapid growth has transformed how data is handled and has produced innovative solutions across many industries. Yet this progress also raises significant privacy concerns, sparking debate over the ethical management of personal data.

The Data Privacy Challenge in the Age of AI

Integrating AI into daily life has been revolutionary, and its capabilities keep expanding, from personalized marketing strategies to advanced healthcare diagnostics. That growth, however, comes with significant privacy concerns. At their core is the way AI algorithms process vast amounts of data, including sensitive personal information. AI’s ability to analyze and predict user behavior, while beneficial in many contexts, also raises questions about surveillance, data misuse, and the erosion of personal privacy. As AI systems become more sophisticated, the line between valuable data analysis and privacy infringement blurs, which calls for a careful examination of the ethical implications of AI-driven data use.

The potential for privacy breaches in AI systems is not just theoretical; several high-profile incidents have brought these issues to the forefront. The Cambridge Analytica scandal, for instance, showed how personal data harvested from Facebook users without their consent could be exploited for political advertising, with algorithmic profiling used to target and influence voters. Another example involves AI-powered virtual assistants, whose voice recordings were stored and, in some cases, reviewed by human contractors without users’ explicit consent. These incidents underscore the vulnerabilities inherent in AI systems and the need for robust privacy protections against the misuse of personal data.

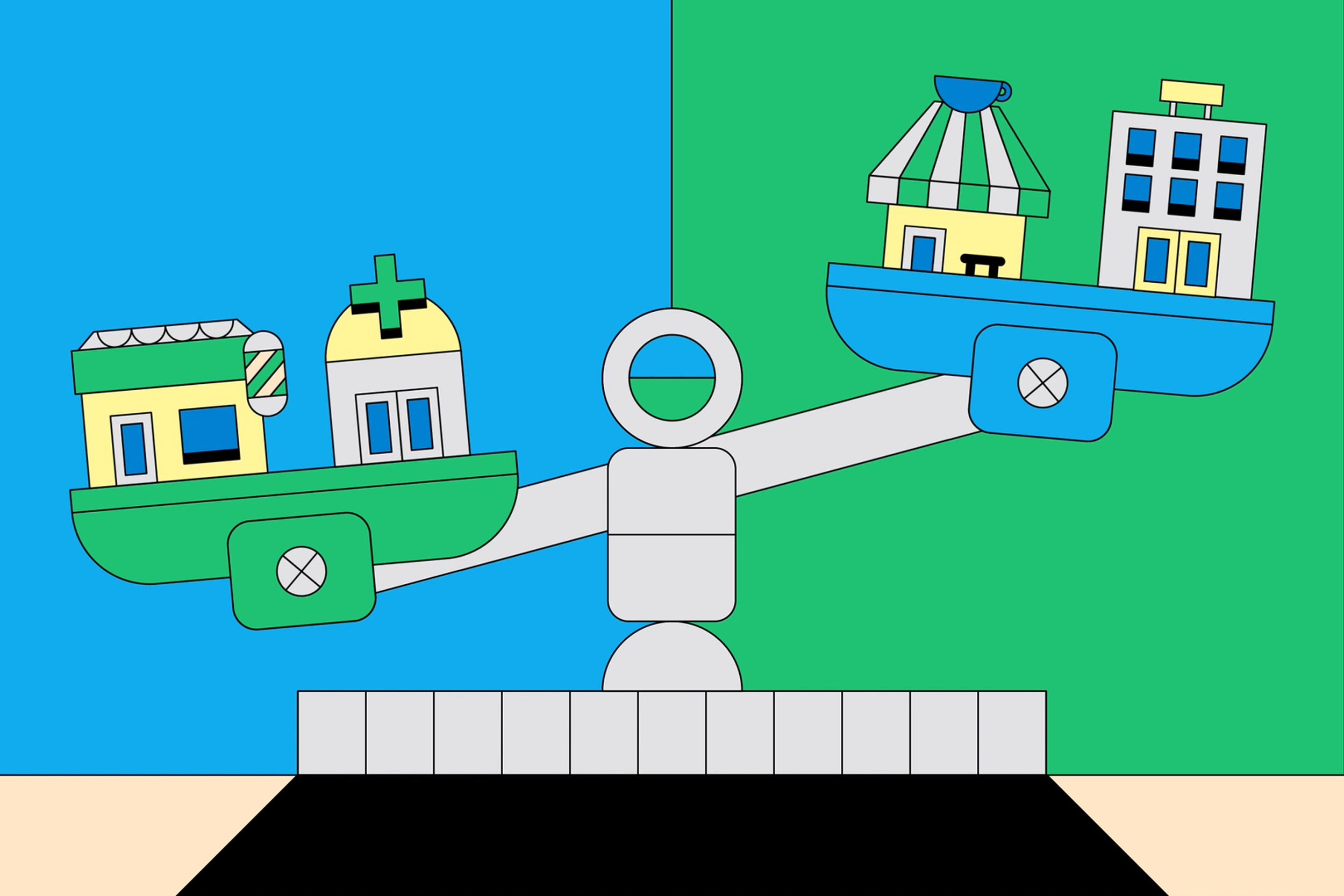

The dilemma facing the tech industry and regulatory bodies is how to foster AI advancement while ensuring individual privacy protection. On one hand, AI’s potential for societal and economic benefits is immense. It can drive innovation, streamline processes, and solve complex problems. On the other hand, there is a pressing need to safeguard personal data and maintain privacy standards. This balancing act requires a nuanced approach that embraces AI’s advantages but also rigorously addresses the privacy concerns it raises. Developing frameworks and guidelines that govern AI use, ensuring transparency in AI operations, and empowering users to control their data are some steps that can help navigate this complex landscape. The goal is to create an environment where AI can thrive without compromising the privacy rights of individuals.

AI’s Double-Edged Sword in Data Privacy

- The Privacy Challenges Posed by AI

AI intensifies privacy concerns because it can collect and process data automatically and at scale. Systems designed to amass and analyze extensive data can significantly improve user experiences and service efficiency, but this collection often happens without explicit user consent, which raises serious privacy red flags. However efficient it is, automated data collection calls into question how transparent AI systems are and how much control users retain over their personal data.

AI can also inadvertently propagate biases and expose personal data to misuse. The accuracy and fairness of an AI system’s decisions depend on its training data: biased training data can cause an algorithm to make skewed decisions, potentially producing discriminatory outcomes. Moreover, the large pools of personal data that AI systems collect are attractive targets for misuse, posing a significant threat to user privacy.
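One common way to check for the kind of skew described above is to compare how often a model produces a favorable outcome for each group, a metric known as demographic parity. The sketch below assumes binary predictions and group labels are already available; the function names and the loan-approval data are hypothetical.

```python
from collections import defaultdict

def positive_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan-approval predictions (1 = approved) for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rates(preds, groups))          # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A large gap does not prove discrimination on its own, but it flags a model for closer review. Demographic parity is only one of several fairness metrics, and the appropriate one depends on the application.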

- AI as a Catalyst for Enhancing Data Privacy

Despite these challenges, AI can also be a potent ally for data privacy. It can help anonymize personal data, obscuring individual identities when the technique is applied carefully. AI systems can also be paired with modern encryption technologies, which keep personal data protected even if it is accessed without authorization.

AI’s predictive analytics capabilities are valuable for assessing privacy risks before they materialize. By identifying patterns and potential vulnerabilities in data handling, AI systems can anticipate and mitigate privacy risks, a proactive stance that helps organizations prevent breaches rather than merely react to them. Predictive analytics also helps ensure adherence to privacy policies within organizations, offering a systematic approach to monitoring and auditing data usage.
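Production systems may use trained models for this, but a simple statistical baseline illustrates the idea of monitoring data usage: flag any account whose record accesses today are far above its own history. This is a minimal sketch under assumed inputs; the log format, the `flag_unusual_access` name, and the three-standard-deviation threshold are illustrative choices, not a standard.

```python
import statistics

def flag_unusual_access(history, today, threshold=3.0, min_spread=1.0):
    """Flag accounts whose record-access count today is far above their own baseline.

    history: dict of account -> list of past daily access counts
    today:   dict of account -> today's access count
    Returns the flagged account names, sorted.
    """
    flagged = []
    for account, count in today.items():
        past = history.get(account, [])
        if len(past) < 2:
            # Too little history to form a baseline; skipped here
            # (a real system might route these to manual review).
            continue
        mean = statistics.mean(past)
        # min_spread keeps a perfectly constant history from flagging tiny changes.
        spread = max(statistics.stdev(past), min_spread)
        if count > mean + threshold * spread:
            flagged.append(account)
    return sorted(flagged)

# Hypothetical access logs (records viewed per day).
history = {
    "analyst_1": [40, 42, 38, 41, 39],
    "analyst_2": [10, 12, 11, 9, 13],
    "new_hire": [5],
}
today = {"analyst_1": 43, "analyst_2": 250, "new_hire": 80}
print(flag_unusual_access(history, today))  # ['analyst_2']
```

A per-account baseline is used rather than one global limit because normal access volumes differ widely between roles.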

Corporate Strategies for AI and Data Privacy

Here are examples of how companies are addressing AI privacy issues.

- Samsung’s Ban on Generative AI Tools

In 2023, Samsung banned employees from using generative AI tools such as ChatGPT after discovering that staff had uploaded sensitive internal code to ChatGPT. Samsung’s cautious stance prioritizes data protection over the potential benefits of these tools. The case shows how companies can take decisive action to mitigate privacy risks, even if that means temporarily halting the use of certain AI technologies.

- Hyperscience’s Approach to AI Governance

Hyperscience, an AI software company, takes a contrasting approach. Instead of imposing bans, it focuses on building a robust AI governance program in which legal, risk management, and data governance teams collaborate to ensure AI is used responsibly. Hyperscience’s strategy underscores the importance of internal governance in managing AI’s privacy implications and shows a proactive way to balance innovation with privacy protection.

Building Effective AI Governance Programs

An essential component of effective AI governance is cross-functional collaboration; this involves bringing together various departments, such as legal, IT, data security, and compliance, to work in unison. Such collaboration ensures a holistic approach to AI governance, where different perspectives and expertise converge to address privacy concerns comprehensively. It also facilitates the development of technically sound and legally compliant policies.

Ethical and legal considerations form the backbone of AI governance programs. Companies must navigate the complex landscape of AI ethics, ensuring their AI systems do not inadvertently harm users or misuse their data; this involves adhering to the principles of fairness, transparency, and accountability. Legal compliance is equally important, with regulations such as the GDPR and the California Consumer Privacy Act (CCPA) setting strict standards for data privacy. Companies must ensure their AI deployments align with these regulations and adapt their strategies as legal frameworks evolve.

Technological Innovations in AI for Privacy Preservation

- Tokenization and Anonymization Techniques

Tokenization replaces sensitive data elements with non-sensitive substitutes, called tokens, that have no exploitable value on their own; the mapping back to the original values is typically kept in a separately secured system. The method is particularly effective in financial services, where protecting customer data is paramount. Anonymization, by contrast, strips personally identifiable information from data. When applied correctly, it prevents individual data subjects from being identified even when the data is cross-referenced with other sources. AI enhances both techniques by automating and optimizing the process, so that data remains useful for analysis without compromising individual privacy.
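The sketch below illustrates both ideas in miniature. `TokenVault` is a hypothetical in-memory stand-in for a tokenization service, and `generalize` drops direct identifiers and coarsens quasi-identifiers such as age and ZIP code. Generalization is only one step toward anonymization; on its own it does not guarantee that individuals cannot be re-identified.

```python
import secrets

class TokenVault:
    """Hypothetical in-memory token vault; a real one is a separately secured service."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value):
        # Return the existing token so the same value always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Random tokens carry no information about the value they replace.
        token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        # Only callers with access to the vault can recover the original value.
        return self._token_to_value[token]


DIRECT_IDENTIFIERS = {"name", "email", "card_number"}

def generalize(record):
    """Drop direct identifiers and coarsen quasi-identifiers (age band, 3-digit ZIP prefix)."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "age" in out:
        low = (out["age"] // 10) * 10
        out["age"] = f"{low}-{low + 9}"
    if "zip" in out:
        out["zip"] = out["zip"][:3] + "**"
    return out


vault = TokenVault()
record = {"name": "Jane Doe", "email": "jane@example.com", "card_number": "4111111111111111",
          "age": 37, "zip": "94107", "purchase": 42.50}

# Tokenized copy for payment processing: the card number becomes an opaque token.
payment_view = dict(record, card_number=vault.tokenize(record["card_number"]))
# Generalized copy for analytics: identifiers removed, quasi-identifiers coarsened.
analytics_view = generalize(record)
print(analytics_view)  # {'age': '30-39', 'zip': '941**', 'purchase': 42.5}
```

Tokenization keeps the data reversible for authorized systems, while generalization deliberately throws information away; which one fits depends on whether the original value is ever needed again.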

- Synthetic Data and Its Role in Privacy

Synthetic data consists of artificially generated datasets that mimic the statistical properties of real-world data without containing actual user records. It is particularly useful for training AI models that need large volumes of data in settings where privacy concerns are paramount. Organizations can use synthetic data to develop and test AI models without exposing sensitive personal data, striking a balance between data utility and privacy, provided the generator does not simply reproduce individual records from the real data it was built from.
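As a deliberately simple illustration, the sketch below fits each column of a small, made-up dataset independently (observed category frequencies, or a mean and standard deviation for numbers) and samples new rows from those fitted distributions. This is a toy under strong assumptions: it ignores correlations between columns and provides no formal privacy guarantee, which is why practical synthetic-data generators use richer models and are often combined with techniques such as differential privacy.

```python
import random
import statistics

def fit_marginals(rows):
    """Fit each column on its own: observed values for categories, mean/stdev for numbers."""
    model = {}
    for col in rows[0]:
        values = [row[col] for row in rows]
        if all(isinstance(v, (int, float)) for v in values):
            model[col] = ("numeric", statistics.mean(values), statistics.stdev(values))
        else:
            model[col] = ("categorical", values)
    return model

def sample_synthetic(model, n, seed=0):
    """Draw n synthetic rows, sampling every column independently of the others."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        row = {}
        for col, spec in model.items():
            if spec[0] == "numeric":
                _, mu, sigma = spec
                row[col] = round(rng.gauss(mu, sigma), 1)
            else:
                # Choosing from the observed list samples categories by their frequency.
                row[col] = rng.choice(spec[1])
        rows.append(row)
    return rows

# Made-up "real" records used only for illustration.
real = [
    {"age": 34, "diagnosis": "flu", "systolic_bp": 118},
    {"age": 51, "diagnosis": "asthma", "systolic_bp": 131},
    {"age": 29, "diagnosis": "flu", "systolic_bp": 112},
    {"age": 63, "diagnosis": "diabetes", "systolic_bp": 142},
]
synthetic = sample_synthetic(fit_marginals(real), n=100)
print(synthetic[:2])
```

The fixed seed makes the output reproducible for testing; a real pipeline would also check that no synthetic row is a near-copy of a real one.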

- Fully Homomorphic Encryption: A Game-Changer for AI Privacy

Fully homomorphic encryption (FHE) represents a significant leap forward in privacy technology. FHE allows arbitrary computations (both additions and multiplications) on encrypted data without decrypting it, so AI algorithms can analyze and draw insights from data while it remains encrypted and the party doing the computation never sees the plaintext. Its potential is vast, especially in fields like healthcare and finance, where handling sensitive data is a daily necessity. FHE is still much slower than computing on unencrypted data, but as the technology matures it is poised to change how we approach privacy in AI, removing the trade-off between data utility and the protection of personal information.
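Production FHE schemes are too involved for a short example, so the sketch below swaps in a simpler, related idea: the Paillier cryptosystem, which is only partially homomorphic (it supports adding encrypted numbers, not arbitrary computation). It still shows the core promise described above, computing on ciphertexts without decrypting them. The tiny hard-coded primes make it completely insecure, the function names are illustrative, and `math.lcm` requires Python 3.9 or later.

```python
import math
import secrets

# Toy primes for illustration only: real keys use a modulus of 2048+ bits.
P, Q = 1009, 1013

def keygen(p=P, q=Q):
    """Return (public n, private key) for Paillier with generator g = n + 1."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)          # Carmichael function of n
    mu = pow(lam, -1, n)                  # modular inverse; valid because g = n + 1
    return n, (lam, mu, n)

def encrypt(n, m):
    """Encrypt an integer 0 <= m < n with fresh randomness r."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # r in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    # (n + 1)^m mod n^2 equals 1 + m*n mod n^2, so no large exponentiation is needed.
    return ((1 + m * n) * pow(r, n, n2)) % n2

def decrypt(private_key, c):
    lam, mu, n = private_key
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n

def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts yields an encryption of the sum of the plaintexts (mod n)."""
    return (c1 * c2) % (n * n)

public_n, private_key = keygen()
c_a = encrypt(public_n, 52_000)
c_b = encrypt(public_n, 61_000)
c_sum = add_encrypted(public_n, c_a, c_b)   # computed without decrypting either input
print(decrypt(private_key, c_sum))          # 113000
```

Because each encryption uses fresh randomness, encrypting the same value twice gives different ciphertexts, yet their product still decrypts to the correct sum. FHE schemes extend this idea to multiplication as well, which is what makes general computation on encrypted data possible.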

Balancing Innovation and Privacy: The Middle Road

Advocates of stringent regulation emphasize the need to safeguard personal data against potential AI misuse. Others worry that excessive regulation could stifle the advances AI promises. This ongoing debate highlights the need for a balanced regulatory approach that accommodates AI’s rapid development while maintaining robust privacy protections. It is a delicate balancing act that seeks to harmonize the pace of technological breakthroughs with the imperatives of data privacy.

Pursuing a middle ground hinges on a commitment to ethical AI development; this means designing AI systems that are not only efficient but also transparent, equitable, and privacy-conscious. Ethical AI encompasses a broad spectrum of practices, from setting clear data usage guidelines and robust security protocols to ensuring unbiased AI algorithms. It also involves inclusive stakeholder engagement, ensuring AI’s trajectory aligns with societal values. This ethical approach is pivotal in marrying the innovative prowess of AI with the fundamental need for privacy.

Several efforts illustrate this balance. The European Union’s General Data Protection Regulation (GDPR) is a landmark in regulating the use of personal data and has influenced privacy standards worldwide, including in the AI context. Apple embeds privacy-focused techniques such as differential privacy in its products, adding statistical noise so that aggregate usage patterns can be learned without singling out any individual. The IEEE’s AI ethics guidelines also offer a blueprint for responsible AI development, emphasizing user data rights and transparency.
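Differential privacy can be made concrete with the classic Laplace mechanism, shown below for a counting query. This is a minimal, central-model sketch for illustration, not Apple’s on-device (local) implementation; the `private_count` name, the dataset, and the choice of epsilon = 0.5 are assumptions. A smaller epsilon means more noise and stronger privacy.

```python
import random

def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count satisfying epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon is sufficient.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    # The difference of two independent exponentials with rate epsilon
    # (mean 1/epsilon) is Laplace-distributed with scale 1/epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise

# Hypothetical usage data: 100 of 300 users have the feature enabled.
users = [{"uses_feature": i % 3 == 0} for i in range(300)]
noisy = private_count(users, lambda u: u["uses_feature"], epsilon=0.5, rng=random.Random(42))
print(round(noisy, 1))  # near 100; the exact value depends on the random noise
```

The released number is useful in aggregate, yet the noise means no one can tell from it whether any particular user was included.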

The Role of Individuals in AI Data Privacy

- Empowering Users with Knowledge and Control

At the heart of AI data privacy is the empowerment of individuals: users need to understand how their data is used and to have control over it. This empowerment begins with transparency from companies and developers about their AI systems’ data practices. Users should have easy access to information about what data is collected, how it is used, and who has access to it. They should also have tools to control their data, such as opting in to or out of data collection, accessing the data held about them, and requesting its deletion. Empowering users in this way enhances privacy and builds trust in AI technologies.
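The controls listed above map naturally onto a few operations in a data store. The sketch below is hypothetical: `UserDataStore` and its methods are illustrative names, and a real system would also need authentication, audit logs, and deletion from backups and downstream processors. It collects nothing until a user explicitly opts in.

```python
from dataclasses import dataclass, field

@dataclass
class UserDataStore:
    """Hypothetical store that honours opt-in consent, access and deletion requests."""
    consent: dict = field(default_factory=dict)   # user_id -> True if opted in
    records: dict = field(default_factory=dict)   # user_id -> list of collected events

    def set_consent(self, user_id, opted_in):
        self.consent[user_id] = opted_in

    def collect(self, user_id, event):
        """Store an event only if the user has opted in; returns whether it was stored."""
        if not self.consent.get(user_id, False):  # no recorded consent means no collection
            return False
        self.records.setdefault(user_id, []).append(event)
        return True

    def export(self, user_id):
        """Access request: return a copy of everything held about the user."""
        return list(self.records.get(user_id, []))

    def delete(self, user_id):
        """Deletion request: erase the user's stored data and withdraw consent."""
        self.records.pop(user_id, None)
        self.consent[user_id] = False


store = UserDataStore()
before_opt_in = store.collect("u1", "page_view")   # False: user has not opted in
store.set_consent("u1", True)
after_opt_in = store.collect("u1", "page_view")    # True
print(store.export("u1"))                          # ['page_view']
store.delete("u1")
print(store.export("u1"))                          # []
```

Defaulting to "no consent" makes opting in an explicit choice, and having `delete` also withdraw consent prevents the erased data from immediately being collected again.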

- Advocacy and Accountability in AI Usage

Individuals also play a key role in advocating for responsible AI use and holding companies accountable. This means staying vigilant about how AI systems handle personal data and speaking out against practices that infringe on privacy rights. Users can exercise their power through the products they choose and by supporting companies that prioritize privacy. They can also participate in public discourse about AI and privacy, influencing policy and regulatory decisions. As active participants, users can help steer AI development in a direction that respects and protects privacy.

- The Importance of Public Awareness and Education

Public awareness and education are fundamental to equipping individuals to navigate an AI-driven world. Many users are unaware of the privacy implications of AI’s integration into their daily lives. Educational initiatives and public awareness campaigns can demystify AI and inform people about their rights and the tools available to protect their privacy. This education should also cover the biases and limitations of AI, fostering a more informed and critical user base. With greater awareness and education, individuals are better prepared to make informed decisions about their data and to hold AI systems to higher privacy standards.

Conclusion

Finding the equilibrium between the advancements in AI and safeguarding data privacy is an intricate yet crucial endeavor that demands a concerted effort from all stakeholders involved. This journey is not just about navigating the challenges posed by AI but also about embracing its potential to enhance privacy protection. The essence of this balance lies in the commitment to ethical AI practices, the establishment of strong governance structures, and the adoption of cutting-edge privacy technologies like tokenization and advanced encryption. Furthermore, individuals’ empowerment and active participation in managing their data privacy are paramount. As we forge ahead in this dynamic digital landscape, our collective aim should be to leverage AI’s revolutionary capabilities while steadfastly protecting individual privacy, paving the way for a future where technological innovation and personal privacy flourish together.