In a world where artificial intelligence permeates daily life, its application to armed conflict raises crucial questions about the future of warfare. The most pressing development on the horizon is the imminent integration of AI-based decision-making platforms into defense operations. With promises that range from enhanced situational awareness to faster decision-making cycles, these tools are presented as a revolution in how wars are waged. Yet a closer look reveals a complex landscape in which the claimed advantages come with significant challenges, posing risks both to civilians and to the effectiveness of military operations.

Minimizing risk of harm to civilians in conflict

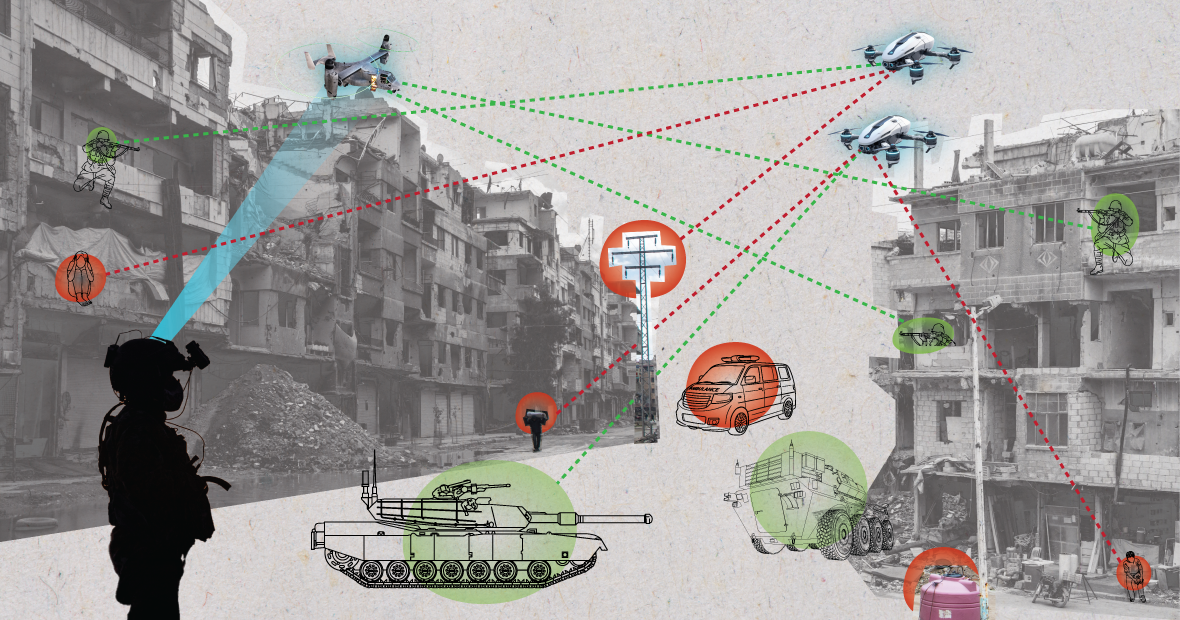

In the pursuit of minimizing harm to civilians in conflict zones, AI-based decision support systems (AI-DSS) are emerging as potential game-changers. Advocates argue that these tools can harness AI’s ability to collect and analyze information rapidly, helping commanders make better-informed and, ideally, safer decisions.

The International Committee of the Red Cross (ICRC) suggests that AI-DSS, by drawing on open-source information such as that available on the internet, could give commanders more comprehensive data and thereby potentially reduce risks to civilians. Yet reliance on AI also makes it critical to cross-check system outputs against multiple sources, because these systems are susceptible to biased or inaccurate information.

The allure of AI in warfare is tempered by the stark reality of system limitations. Recent failures, from systems that misidentify people on the basis of skin color to fatal crashes involving self-driving cars, underscore the challenges. These systems are prone to bias and other vulnerabilities, and they can be deliberately manipulated, with potentially catastrophic outcomes. As AI takes on more complex tasks, the likelihood of compounding errors rises, and the interconnected nature of the underlying algorithms makes inaccuracies difficult to trace and correct. The unpredictable behavior of AI, as documented in a study of GPT-4, raises further concerns about its reliability in critical decision-making scenarios.

Although AI-DSS do not make decisions themselves, their influence on human decision-makers introduces its own set of challenges. Automation bias, the tendency of humans to accept system outputs without critical scrutiny, poses a significant risk. The 2003 incidents in which US Patriot missile batteries fired on friendly aircraft, due to operators’ misplaced trust in the system’s software, show the potential consequences of this bias. In armed conflict, the same dynamic could lead to unintended escalation and heightened risks for civilians. Because AI interacts with human cognitive tendencies in this way, the role humans play when working with AI in decision-making processes needs to be carefully reassessed.

Balancing speed and precision

One of the touted advantages of AI in warfare is a faster decision-making tempo, which can provide a strategic edge over adversaries. Yet this acceleration makes it harder to maintain precision and minimize risks to civilians. ‘Tactical patience’, the practice of deliberately slowing down decision-making, therefore becomes crucial for ensuring thorough assessments and informed choices at every stage.

The notorious 2021 Kabul drone strike, which killed ten civilians, including seven children, is a stark example of the dire consequences of compressed decision-making time. Without the “luxury of time,” those involved could not develop a comprehensive ‘pattern of life’ analysis, a gap that contributed to the tragic civilian casualties. Slowing the tempo, as military doctrine advocates, allows decision-makers to gather more information, understand the complexity of a situation, and develop a wider range of options. That extra time is invaluable for preventing unintended harm to civilians and supports a more deliberate, thorough decision-making process.

Ethical crossroads of AI-based decision making in warfare

In the chaos of war, using AI demands a human-centered approach that prioritizes the well-being of those affected and underscores the responsibilities of human decision-makers. As AI is integrated into armed conflict, its potential benefits must be weighed against its known challenges.

Claims that AI-DSS will improve civilian protection must be critically examined in light of system limitations, the nuances of human-machine interaction, and the implications of a faster operational tempo. As the future of warfare takes shape, can AI and human decision-makers truly coexist in harmony, or does the pursuit of technological advantage come at the cost of humanity?