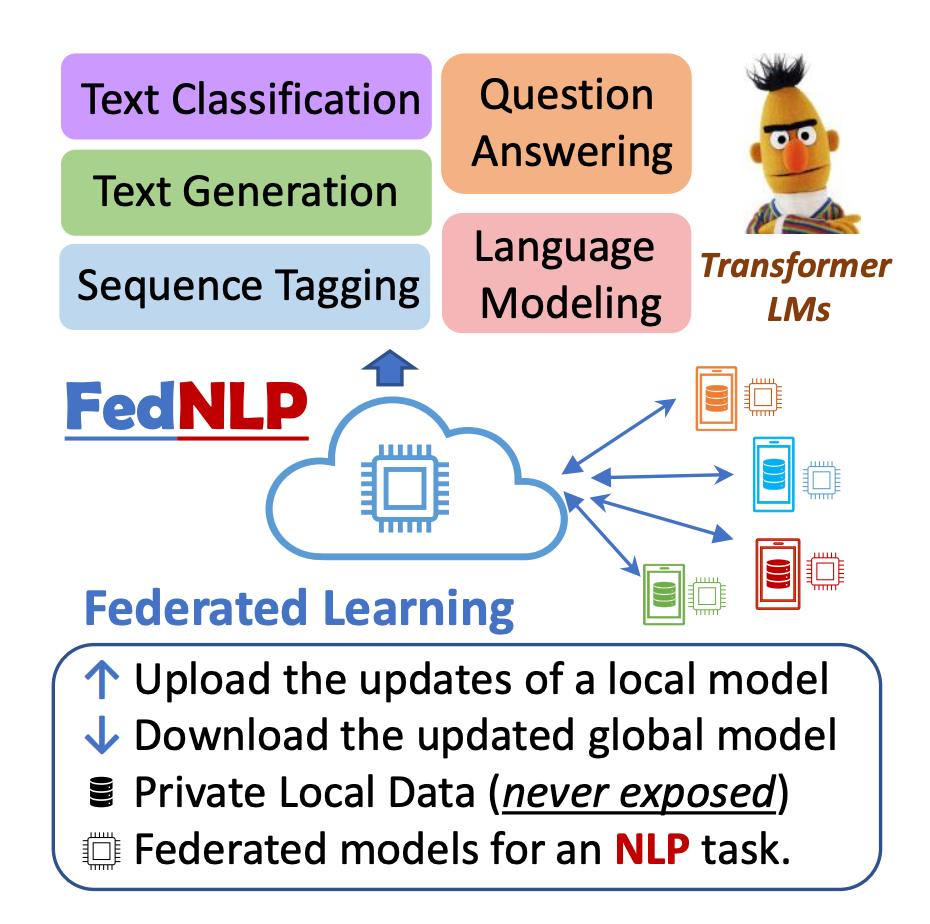

Federated Learning (FL) is emerging as a transformative approach for Large Language Models (LLMs). By combining the language capabilities of LLMs with decentralized model training, it lets models learn from data that never has to leave its owners, with the potential to reshape industries.

Large language models have revolutionized tasks that require natural language understanding, from healthcare to finance, by analyzing vast datasets and generating human-like responses. However, their effectiveness in a given domain often hinges on fine-tuning, which demands significant computational resources and is limited by how much domain-specific data an organization can acquire. Balancing the need for fine-tuning against these resource constraints is crucial for organizations that use LLMs in their workflows.

Why federated learning is a game-changer

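Privacy Preservation and Enhanced Security: FL protects user privacy by sending only model updates, not raw data, to a central server. Because raw data stays with its owner, this decentralized approach aligns with privacy regulations and reduces the risk of data breaches, although the updates themselves can still reveal some information, so FL is not a complete security guarantee on its own.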

Scalability and Cost-Effectiveness: FL's decentralized training spreads the computational workload across participating clients, which improves scalability and can reduce the cost of centralized infrastructure. This broadens access to the benefits of LLMs, particularly for organizations with limited resources.

Continuous Improvement and Adaptability: FL copes with continuously expanding datasets because clients can fold newly collected data into the existing model through further training rounds, without first centralizing it. This supports continuous improvement and adaptation to changing conditions, which is crucial in dynamic sectors such as healthcare and finance.

Improved User Experience with Local Deployment: Beyond privacy and scalability, FL can significantly improve the user experience. When models are deployed directly on edge devices, responses are generated locally, which reduces latency and gives users quick answers. This is particularly valuable in applications where immediate responses are critical.
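The privacy point above rests on what actually leaves a client. A minimal sketch of federated averaging (FedAvg), a widely used FL algorithm that the article does not name, makes this concrete. It assumes a toy linear model standing in for an LLM, and the functions `local_update` and `fed_avg` are hypothetical names chosen for illustration: each client trains on its own samples, and the server only ever combines the returned weights, averaging them by each client's sample count.

```python
from typing import List, Sequence, Tuple

Weights = List[float]
Sample = Tuple[Sequence[float], float]  # (features, target), kept on the client
Dataset = List[Sample]


def local_update(weights: Weights, data: Dataset, lr: float = 0.1, epochs: int = 5) -> Weights:
    """Client side (hypothetical): a few epochs of SGD on a linear model using only local data."""
    w = list(weights)
    for _ in range(epochs):
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            # Gradient of 0.5 * err**2 with respect to w_i is err * x_i.
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


def fed_avg(global_w: Weights, clients: List[Dataset], rounds: int = 20) -> Weights:
    """Server side (hypothetical): broadcast weights, collect client weights, average by sample count."""
    w = list(global_w)
    for _ in range(rounds):
        # In a real deployment each call runs on its own device and only the
        # returned weights are sent back; the raw samples stay local.
        client_weights = [local_update(w, data) for data in clients]
        sizes = [len(data) for data in clients]
        total = sum(sizes)
        w = [
            sum(n * cw[i] for n, cw in zip(sizes, client_weights)) / total
            for i in range(len(w))
        ]
    return w


if __name__ == "__main__":
    # Two hypothetical clients whose private samples follow y = 2*x0 + 1*x1.
    clients = [
        [((1.0, 0.0), 2.0), ((0.0, 1.0), 1.0)],
        [((2.0, 0.0), 4.0), ((0.0, 2.0), 2.0), ((1.0, 0.0), 2.0)],
    ]
    print(fed_avg([0.0, 0.0], clients))  # approaches [2.0, 1.0]
```

This single-process simulation keeps every dataset in one list for convenience. In a real system each dataset would stay on its own device, only the output of `local_update` would be transmitted, and the weights would be tensors with billions of entries rather than two floats.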

Challenges of federated learning in large language models

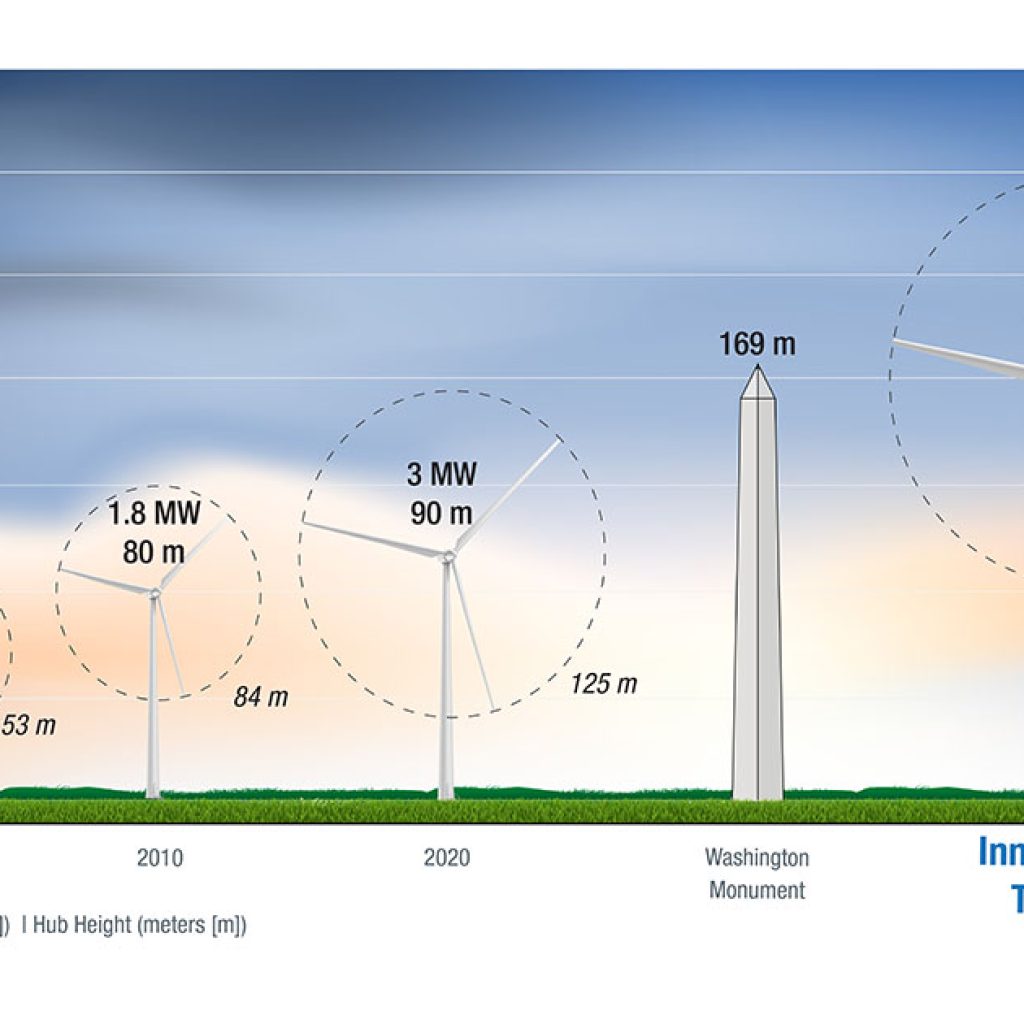

Large Models, Big Demands: LLMs demand substantial memory, communication, and computational resources. Conventional FL methods send the entire LLM to every client and train all of its parameters on local data, which strains clients' storage, compute, and network bandwidth.

Proprietary LLMs: Proprietary Large Language Models pose a different challenge, because clients do not own them and typically cannot access their weights. For closed-source LLMs in particular, federated fine-tuning has to work without giving clients the entire model.

Despite these challenges, FL holds considerable potential for overcoming the obstacles to using LLMs. Collaborative pre-training and fine-tuning can make LLMs more robust, and efficient algorithms can reduce memory, communication, and computation costs. Designing FL systems specifically for LLMs and tapping into decentralized data are exciting opportunities for the future.
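To put rough numbers on the communication demands described under "Large Models, Big Demands", and on what an efficient algorithm can save, the sketch below compares exchanging a full model each round with exchanging only low-rank adapters (LoRA). LoRA is an illustrative choice of parameter-efficient fine-tuning, not a method this article specifies, and the helper names are hypothetical. All sizes are assumptions: a 7-billion-parameter model stored in 16-bit precision (2 bytes per parameter), and rank-8 adapters on 128 weight matrices of size 4096 × 4096 (for example, four attention projections in each of 32 layers).

```python
def payload_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Bytes to transmit num_params values once (2 bytes each for fp16/bf16)."""
    return num_params * bytes_per_param


def lora_params(d_in: int, d_out: int, rank: int, num_matrices: int) -> int:
    """A rank-r adapter for a d_out x d_in matrix trains B (d_out x r) and A (r x d_in)."""
    return num_matrices * rank * (d_in + d_out)


def traffic_per_round(payload: int, num_clients: int) -> int:
    """Each client downloads the payload and uploads an update of the same size."""
    return 2 * payload * num_clients


if __name__ == "__main__":
    full = payload_bytes(7_000_000_000)  # hypothetical 7B-parameter model
    adapter = payload_bytes(lora_params(4096, 4096, rank=8, num_matrices=32 * 4))
    print(f"full model: {traffic_per_round(full, 10) / 1e9:.1f} GB per round")  # 280.0 GB
    print(f"adapters:   {traffic_per_round(adapter, 10) / 1e6:.1f} MB per round")  # 335.5 MB
```

Under these assumptions, a round with 10 clients moves about 280 GB when the full model is exchanged, versus about 335.5 MB for the adapters alone. Real figures depend on the architecture, the numeric precision, and which layers are adapted.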

Federated learning, the catalyst for LLMs’ full potential

Integrating Federated Learning into the lifecycle of Large Language Models promises new opportunities for collaboration, more efficient training, and innovative solutions to the challenges these models pose. As organizations navigate the complexities of deploying LLMs, FL stands out as a powerful ally, offering a path past these hurdles toward the full potential of these transformative models.

In a world where data privacy, scalability, and adaptability are paramount concerns, Federated Learning is emerging as a critical enabler of effective, secure, and collaborative LLM development across diverse applications and industries. As the technology matures, FL could help LLMs reach their full potential and change how we interact with and benefit from advanced language models.