The upcoming wave of elections around the world has been accompanied by worries that AI and cyberattacks could disrupt the democratic process. A report by Mandiant, commissioned by Google Cloud, describes a diverse range of threats that state actors, cybercriminals, and politically motivated hackers pose to the general public. These threats could jeopardize upcoming elections in many countries around the globe.

Heightened threat landscape

Mandiant’s report highlights the rising level of election-related threats and underscores the urgent need for comprehensive security policies. This year, more than 2 billion voters in 50 countries will head to the polls. That scale makes the risks all the more real and calls for vigilance to ensure that emerging cyber threats do not succeed.

Chinese state-sponsored threat actors have now adopted artificial intelligence (AI) to power widespread disinformation campaigns targeting elections at home and abroad. The latest reports highlight these actors’ notable tactics, particularly around the elections in Taiwan and the upcoming 2024 US presidential election.

In Taiwan, AI-generated newscasts featuring fake presenters have become an effective way of spreading fake news. These mock news segments, made with CapCut, a video-editing app created by ByteDance, are among the key instruments used to spread misinformation and disrupt the democratic process. In addition, AI-generated memes and videos intended to smear well-known political figures have circulated widely, spreading deceptive content even further.

Similarly, the United States has faced relentless attempts by Chinese state-sponsored threat actors to sway American public opinion through disinformation campaigns. Election threats extend well beyond typical hacking: these disinformation campaigns aim to undermine the will of the people and the electoral process itself. Adversaries use every available approach to manipulate outcomes, from targeting voting machines to infiltrating political campaigns and manipulating social media.

Comprehensive security imperative

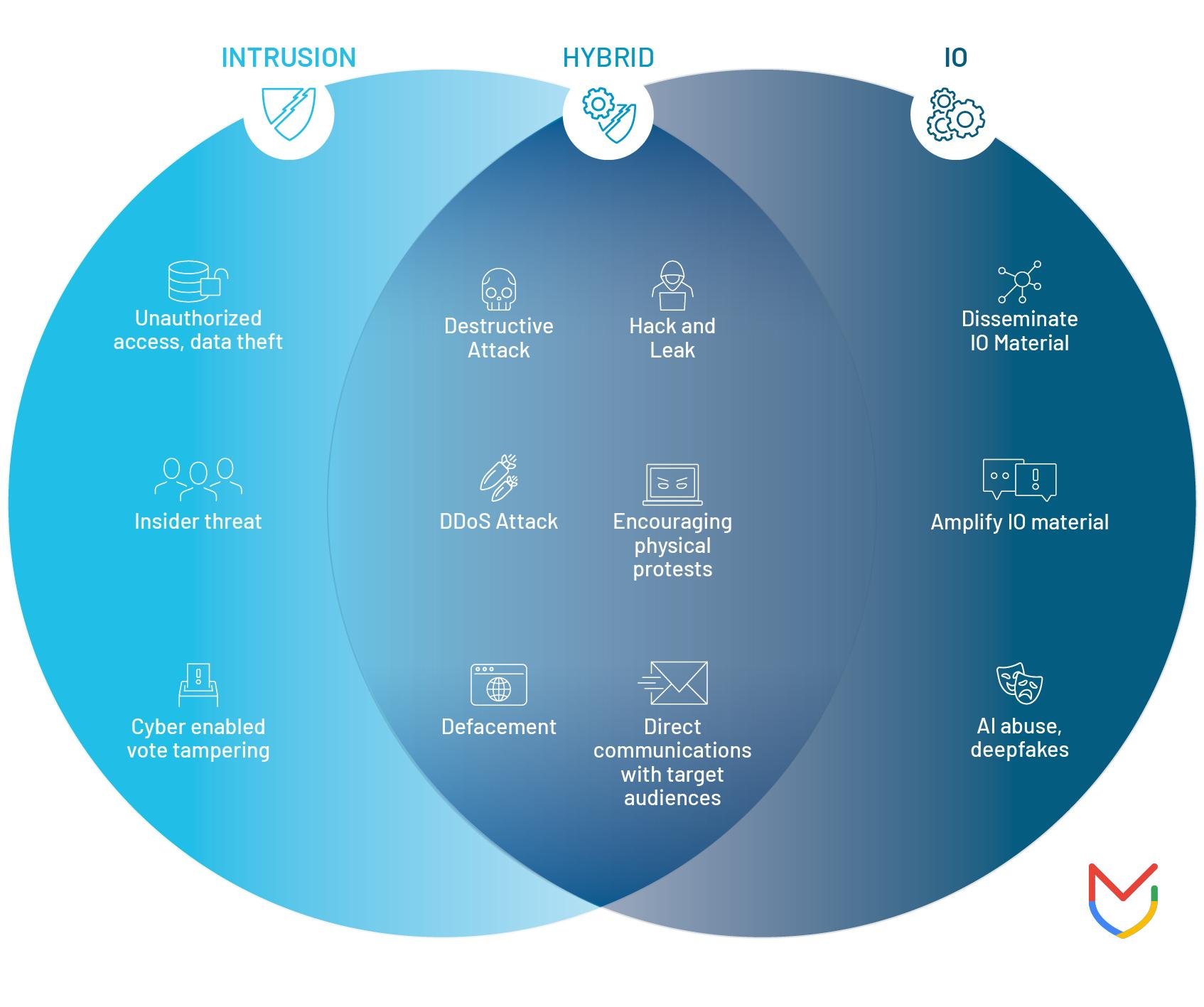

Securing elections will require a holistic approach that covers threat vectors ranging from DDoS attacks to deepfake manipulation. Mandiant places strong emphasis on staying ahead of threats in order to secure digital infrastructure and minimize electoral manipulation.

Although the most obvious concerns about election cybersecurity involve direct disruption of voting and vote tallying, adversaries far more often try to shape public perception through information warfare: spreading fake news, orchestrating DDoS attacks, and covertly manipulating social media to influence electoral outcomes.

Consequently, electoral organizations are expected to adopt proactive defense measures to cope with the changing threat landscape. Understanding the specific threats directed at each country helps them anticipate probable attacks and prepare countermeasures, making democratic institutions more resilient.

US government initiative: the AI Safety and Security Board

In a notable step, the US government has created an Artificial Intelligence Safety and Security Board made up of top tech leaders. Among them are OpenAI CEO Sam Altman, Microsoft CEO Satya Nadella, and Alphabet CEO Sundar Pichai.

Among other things, the board will advise the Department of Homeland Security on how best to integrate AI into the nation’s critical infrastructure safely and securely. Other members include Jensen Huang, CEO of Nvidia; Kathy Warden, CEO of Northrop Grumman; and Ed Bastian, CEO of Delta Air Lines.

The AI Safety and Security Board will work with the US Department of Homeland Security to head off dangerous ways of incorporating AI into critical infrastructure. The board will offer recommendations to utilities, transportation providers, and manufacturers on cybersecurity practices suited to the forms of AI most prevalent today.

The principal objective of the AI Safety and Security Board is to integrate AI technologies safely into critical infrastructure systems. In that space, the board hopes to set guidelines and implementation practices focused on security, safety, and reliability. It aims to compile the best ideas from experienced industry leaders and develop a framework for the responsible deployment of AI across diverse fields.

Including private-sector stakeholders on the board also reflects a joint effort to bring industry knowledge and perspectives to bear on AI policy and regulation. The board is committed to tackling complex AI-related security and safety problems, with the aim of building public confidence and trust.

Artificial intelligence-generated misleading content: Risks and con

AI-generated deepfakes are becoming increasingly sophisticated, making it hard to tell real content from manipulated content. Whether in the form of photos, videos, or audio files, manipulated content can damage the reputation of a politician or political party. Its effect may be limited in a landslide election, but in a close contest, AI-generated content could change the final result by swaying a few crucial votes.

There are global efforts to mitigate the impact that AI manipulation may have on electoral processes. Governments, legislative bodies, and the world’s largest tech firms are addressing the issue by monitoring and regulating AI and, above all, by working to understand its impact. For instance, many countries have established internal election teams and implemented measures to reduce the risks associated with generative AI.

Measures to prevent AI-driven election interference include educating the public about the risks of deceptive AI-generated content. Awareness campaigns are designed to help people judge the authenticity of online content and recognize that it may have been fabricated with AI. The drawback is that heightened suspicion of online information could further erode trust in digital platforms, compounding the problem of disinformation and misinformation.