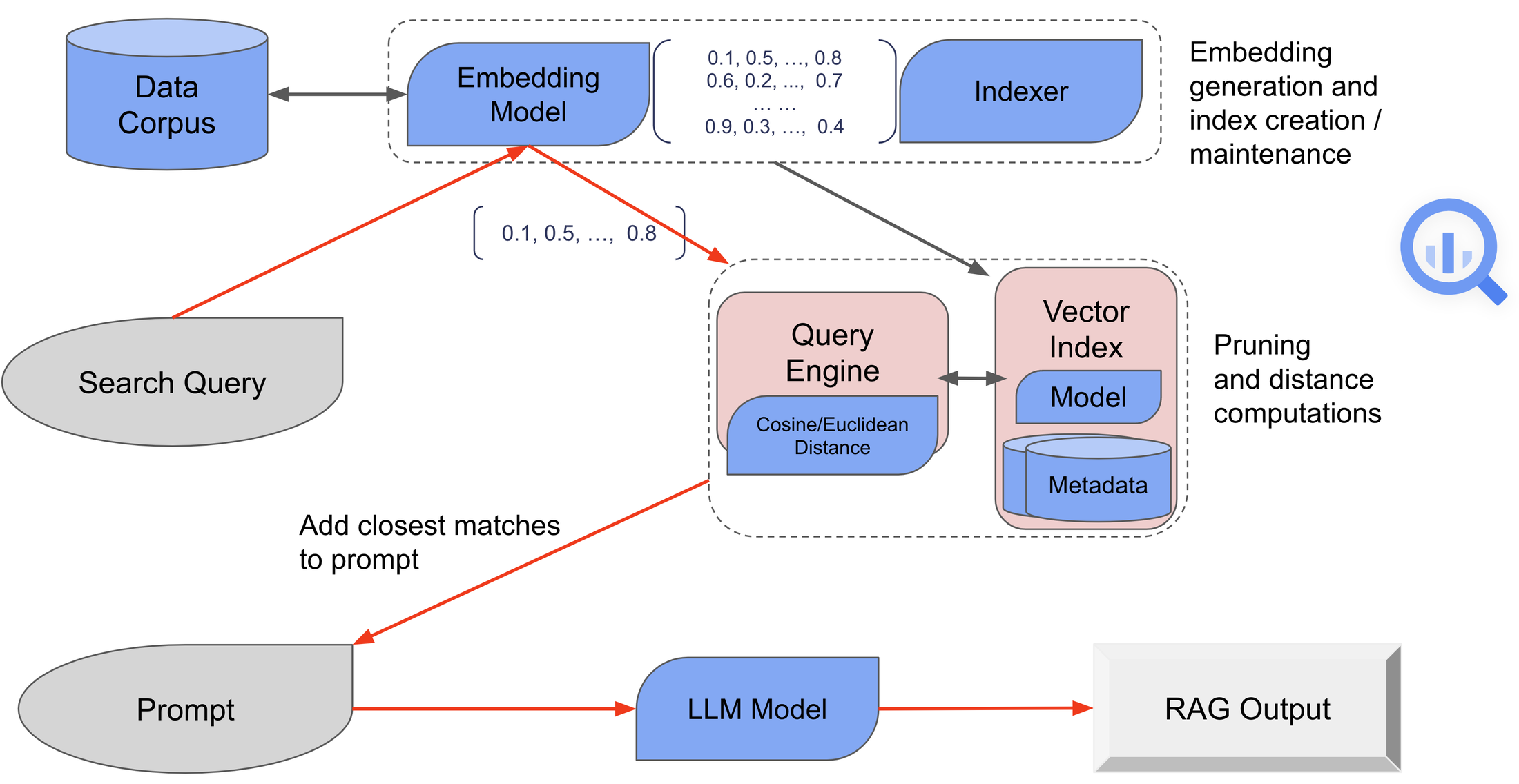

Google has announced vector search in BigQuery, a feature that lets users run vector similarity searches directly on their data. Similarity search underpins a wide range of data and AI applications, including semantic search, similarity detection, and retrieval-augmented generation (RAG) with large language models (LLMs).

Now in preview, BigQuery vector search performs approximate nearest-neighbor search, a core building block for these use cases. The VECTOR_SEARCH function, backed by an optimized index, finds the embeddings closest to a query through efficient index lookups and distance computations.
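VECTOR_SEARCH runs inside BigQuery, but the result it approximates is easy to state. The sketch below is a plain-Python, brute-force (exact) nearest-neighbor search using cosine distance, one of the distance types VECTOR_SEARCH supports. The function names and toy data are hypothetical and are not part of any BigQuery API; the point is to show the full scan over every row that an index is meant to avoid.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Return 1 - cosine similarity; 0.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def exact_top_k(
    query: Sequence[float], base: Dict[str, Sequence[float]], k: int
) -> List[Tuple[str, float]]:
    """Score every row against the query and keep the k nearest (brute force)."""
    scored = ((cosine_distance(query, vec), row_id) for row_id, vec in base.items())
    # nsmallest keeps the k lowest distances; ties fall back to row_id order.
    return [(row_id, dist) for dist, row_id in heapq.nsmallest(k, scored)]


base = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.7, 0.7]}
print(exact_top_k([1.0, 0.1], base, k=2))
```

The cost of this approach grows linearly with the number of rows for every query, which is why an approximate search backed by an index trades a small amount of recall for far fewer distance computations.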

Automatic index updates and optimization

BigQuery updates vector indexes automatically, so searches reflect the latest data in the table. The first index type is IVF (inverted file), a two-piece index that combines a clustering model with an inverted row locator: the clustering model groups the embeddings into clusters, and the row locator maps each cluster to its rows, so a search only needs to compare the query against rows in the clusters nearest to it.
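The two-piece idea can be sketched generically. The example below is a textbook IVF in plain Python, not BigQuery's implementation: a small k-means model plays the role of the clustering piece, a dictionary from cluster id to row ids plays the role of the inverted row locator, and a query scans only the rows in its `n_probe` nearest clusters. All names, the farthest-point initialization, and the use of squared Euclidean distance are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance (same ordering as Euclidean, no sqrt needed)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest_centroid(vec: Sequence[float], centroids: List[List[float]]) -> int:
    return min(range(len(centroids)), key=lambda i: sq_dist(vec, centroids[i]))


def build_ivf(
    vectors: Dict[str, List[float]], n_lists: int, iters: int = 10
) -> Tuple[List[List[float]], Dict[int, List[str]]]:
    """Piece 1: k-means centroids. Piece 2: inverted lists (cluster id -> row ids)."""
    ids = sorted(vectors)
    # Deterministic farthest-point initialization (hypothetical choice for the sketch).
    centroids = [list(vectors[ids[0]])]
    while len(centroids) < n_lists:
        far = max(ids, key=lambda r: min(sq_dist(vectors[r], c) for c in centroids))
        centroids.append(list(vectors[far]))
    for _ in range(iters):
        groups: Dict[int, List[List[float]]] = defaultdict(list)
        for row_id in ids:
            groups[nearest_centroid(vectors[row_id], centroids)].append(vectors[row_id])
        # Move each non-empty cluster's centroid to the mean of its members.
        for c, members in groups.items():
            centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    inverted: Dict[int, List[str]] = defaultdict(list)
    for row_id in ids:
        inverted[nearest_centroid(vectors[row_id], centroids)].append(row_id)
    return centroids, inverted


def ivf_search(query, vectors, centroids, inverted, k: int, n_probe: int = 1) -> List[str]:
    """Probe the n_probe closest clusters, then rank only their rows exactly."""
    probe = sorted(range(len(centroids)), key=lambda i: sq_dist(query, centroids[i]))[:n_probe]
    candidates = [row_id for c in probe for row_id in inverted.get(c, [])]
    return sorted(candidates, key=lambda r: (sq_dist(query, vectors[r]), r))[:k]


vectors = {
    "a": [0.0, 0.0], "b": [0.5, 0.0], "c": [0.0, 0.5],
    "x": [10.0, 10.0], "y": [10.5, 10.0], "z": [10.0, 10.5],
}
centroids, inverted = build_ivf(vectors, n_lists=2)
print(ivf_search([9.9, 10.1], vectors, centroids, inverted, k=2))
```

Raising `n_probe` scans more clusters, which improves recall at the cost of more distance computations; that trade-off is what makes IVF search approximate rather than exact.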

For Python developers, Google also offers a LangChain integration, which simplifies connecting BigQuery vector search to open-source and third-party frameworks so developers can add it to their existing workflows.

Expanding textual data approaches

Max Ostapenko, a senior product manager at Opera, expressed his excitement about the new feature, stating, “Just got positively surprised trying out vector search with embeddings in BigQuery! We are diving into the world of enhancing product insights with Vertex AI now. It expands your approaches to working with textual data.”

Google has also published a tutorial that walks through vector search using the Google Patents public dataset. It covers three use cases: patent search with pre-generated embeddings, patent search with embeddings generated in BigQuery, and RAG through integration with generative models.

According to Omid Fatemieh and Michael Kilberry, engineering lead and head of product at Google, respectively, users can extend these search use cases into full RAG pipelines: the output of a VECTOR_SEARCH query can serve as context when calling Google's natural-language foundation models (LLMs) through BigQuery's ML.GENERATE_TEXT function.
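In BigQuery this retrieve-then-generate flow is written in SQL, with VECTOR_SEARCH results feeding a prompt passed to ML.GENERATE_TEXT. The plain-Python sketch below only illustrates the augmentation step in between: taking the nearest retrieved passages and packing them into a prompt. The function name, the prompt wording, and the `max_hits` cutoff are hypothetical and not taken from Google's tutorial.

```python
from typing import List, Tuple


def build_rag_prompt(question: str, hits: List[Tuple[str, float]], max_hits: int = 3) -> str:
    """Assemble an LLM prompt from a question and retrieved passages.

    hits: (passage_text, distance) pairs, such as rows returned by a vector search.
    A smaller distance means a closer match, so passages are ordered nearest first.
    """
    ranked = sorted(hits, key=lambda h: h[1])[:max_hits]
    # Number each passage so the model (and a reader) can refer back to it.
    context = "\n".join(f"[{i}] {text}" for i, (text, _) in enumerate(ranked, start=1))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


hits = [("far", 0.9), ("near", 0.1), ("mid", 0.5), ("farther", 1.2)]
print(build_rag_prompt("Which patents mention solar cells?", hits))
```

Capping the number of passages keeps the prompt within the model's context window and limits the influence of weak matches.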

Vector search is not the only recent BigQuery addition. Google has also made Gemini 1.0 Pro available to BigQuery customers through Vertex AI and introduced a new BigQuery integration with Vertex AI for text and speech.

Billing and pricing

Both the CREATE VECTOR INDEX statement and the VECTOR_SEARCH function are billed under BigQuery compute pricing. For CREATE VECTOR INDEX, only the indexed column counts toward bytes processed, so index-creation cost depends on the size of the embedding column rather than on the whole table.
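To see what "only the indexed column" means in practice, the sketch below estimates bytes processed for building an index on an embedding column. It assumes the embeddings are stored as `ARRAY<FLOAT64>` with a fixed dimension, that a FLOAT64 counts as 8 bytes and an array as the sum of its elements, and that billing is on-demand (per byte processed); the other column sizes and the `price_per_tib` argument are hypothetical inputs the reader would supply, not published figures.

```python
from typing import Dict

FLOAT64_BYTES = 8  # assumed logical size of one FLOAT64 value
TIB = 2 ** 40


def embedding_column_bytes(num_rows: int, dims: int) -> int:
    """Estimated size of an ARRAY<FLOAT64> column where every row has `dims` elements."""
    return num_rows * dims * FLOAT64_BYTES


def index_build_bytes(column_bytes: Dict[str, int], indexed_column: str) -> int:
    """Only the indexed column counts toward bytes processed for CREATE VECTOR INDEX."""
    return column_bytes[indexed_column]


def on_demand_cost(n_bytes: int, price_per_tib: float) -> float:
    """Hypothetical on-demand estimate; supply your region's current per-TiB rate."""
    return n_bytes / TIB * price_per_tib


# Hypothetical table: 1M rows of 768-dimensional embeddings plus two text columns.
columns = {
    "embedding": embedding_column_bytes(1_000_000, 768),
    "abstract": 1_500_000_000,  # hypothetical size
    "title": 100_000_000,       # hypothetical size
}
billed = index_build_bytes(columns, "embedding")
print(billed, sum(columns.values()))
```

Here the index build would count about 6.1 GB of the roughly 7.7 GB table, because the text columns are not scanned. Under capacity-based (slot) pricing, compute is billed differently, so the byte estimate matters mainly for on-demand queries.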

With vector search, Google BigQuery adds another building block for combining data analytics with AI workloads such as semantic search and RAG.