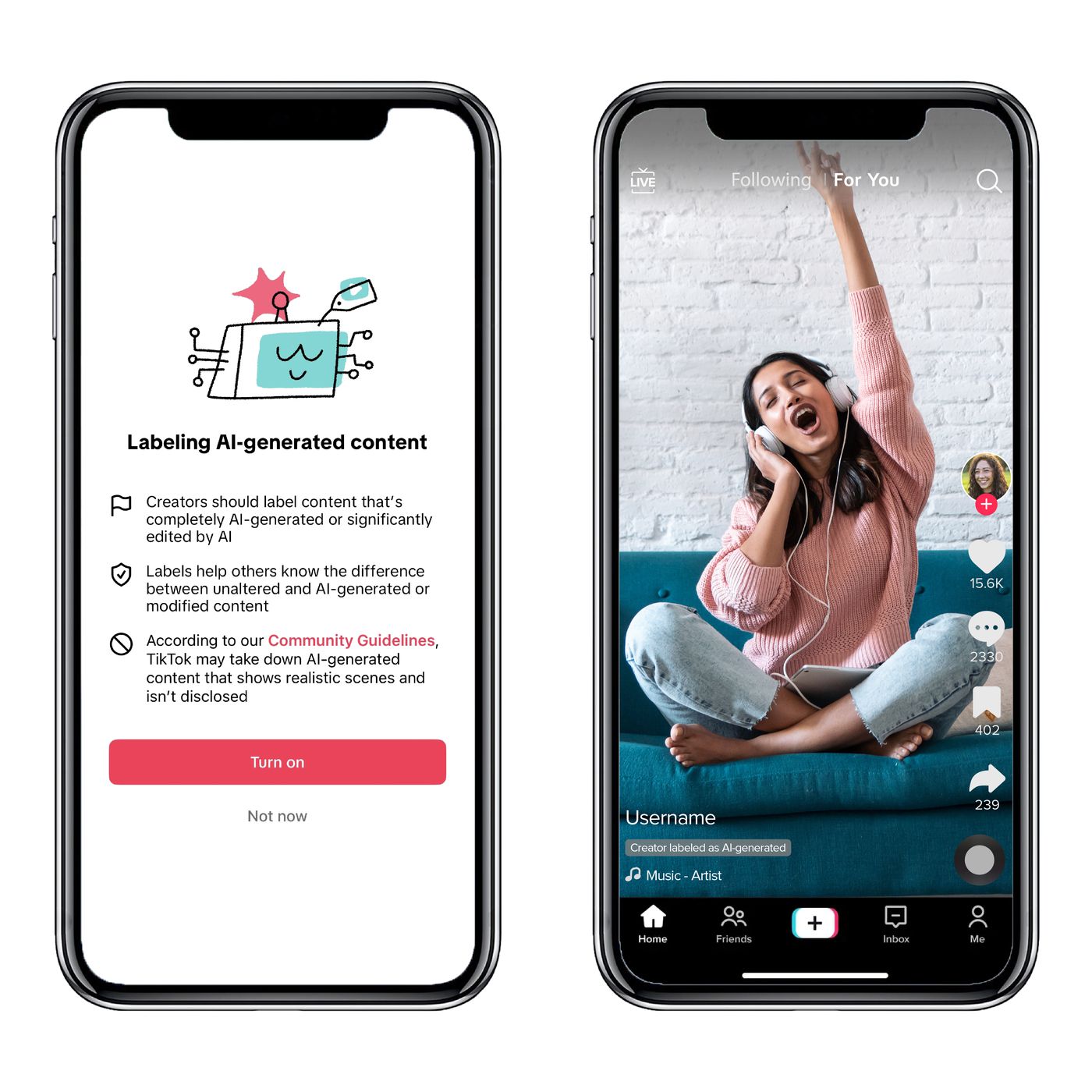

TikTok, the popular short-video app, is taking active measures to address the problematic implications of artificial intelligence (AI)-based content for global elections. The platform is rolling out automatic labeling of AI-generated content (AIGC) that is uploaded from external platforms. With this move, users will have clearer context about the kind of content they see on the platform, which should enhance transparency.

The initiative follows Meta’s lead

TikTok’s update follows a similar move by Meta Platforms, the owner of Facebook, Instagram, and Threads, which had earlier introduced detection and labeling of AI-generated images produced by external AI systems. The update may also draw the attention of privacy regulators, who have raised concerns about TikTok’s rapid spread among young people and the exposure of their personal information beyond their family circle. TikTok’s announcement reflects growing industry concern about the risks of generative AI, which is used extensively to produce deepfakes: deceptive visual content, such as videos, that resembles real people.

TikTok has worked with industry partners to improve content safety. It supports Adobe’s Content Credentials, a technology that attaches metadata to content describing how it was created and edited, so that alterations can be identified. The app has also joined the C2PA, the Coalition for Content Provenance and Authenticity, to further its commitment to reliability and authenticity.

Enhancing transparency and authenticity

TikTok said in a statement that the public has a right to know whether content is human-created or generated by artificial intelligence, which makes labeling AI-generated content vital. The platform already labels content made with its own AI effects. For more than a year, it has also required creators to label realistic AIGC. As a pivotal step in that process, the company created an easy-to-use labeling tool that millions of content creators have used since its release.

The rollout of these measures coincides with growing concern about the misuse of AI-generated content in elections worldwide. Whether platforms should allow content that deceives and misinforms voters has become a pressing question, again drawing attention to the need for measures that protect democratic processes.

Industry-wide collaboration

TikTok’s effort is in line with the broader approach taken by major technology firms to combat the abuse of AI in election meddling. In February 2024, twenty technology companies, including Amazon, Google, Meta, Microsoft, and TikTok, signed an accord pledging to protect elections around the world from deceptive AI-generated content. The main measures involve watermarking and other identification signals intended to make AI content traceable.

The digital terrain is constantly in flux, and TikTok, like many other media platforms, is no exception. Acting quickly to solve critical problems that could damage a platform’s reputation is a primary task for any such company. By introducing AI content labeling and collaborating closely with the private sector, TikTok is trying to build an environment that is trustworthy and favorable to creators and users. With election campaigns under way in many places and AI-generated content proliferating at an alarming rate, safeguarding the democratic process is an issue the world community has to act on now.