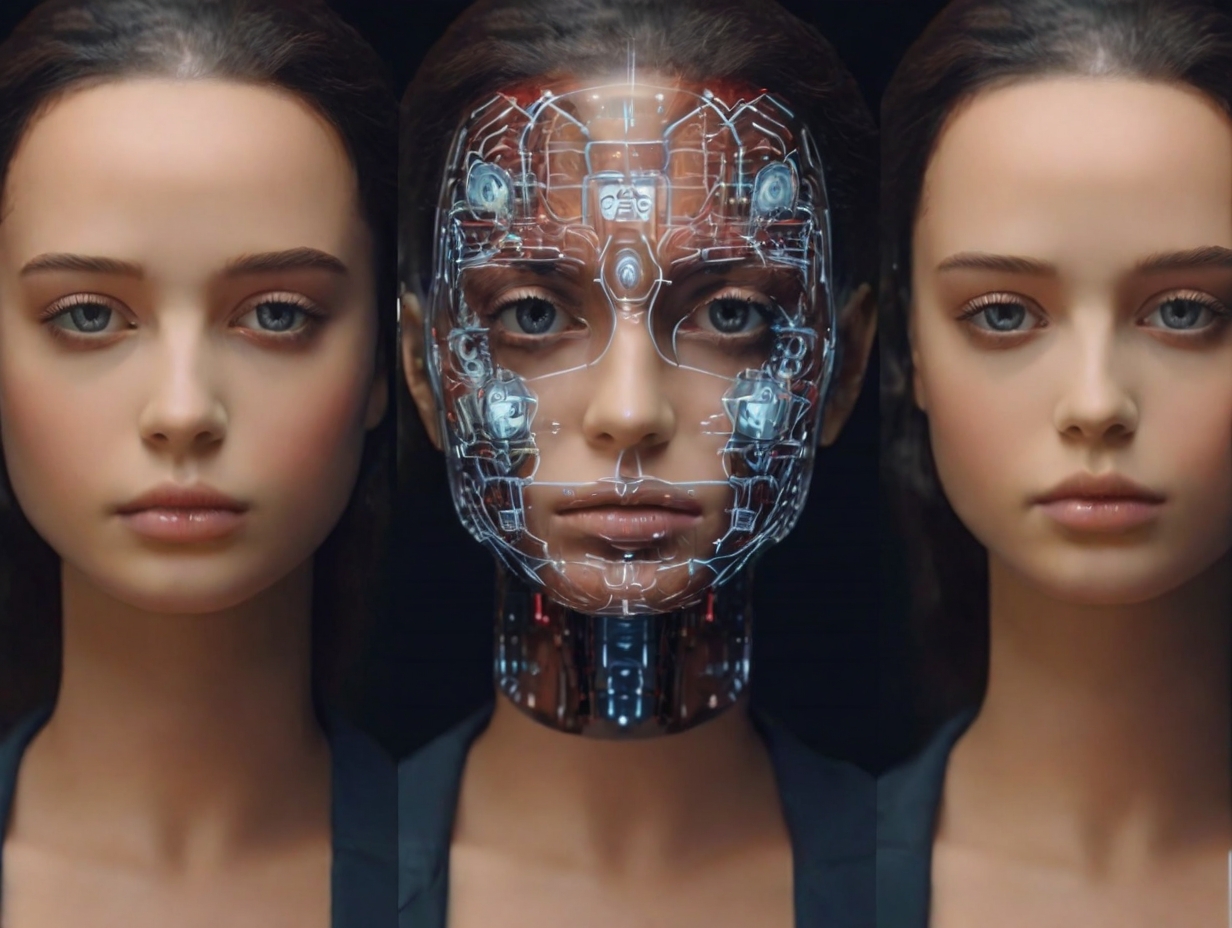

After a scandal involving a deepfake video of podcast host Bobbi Althoff, social media platform X is embroiled in controversy over how it handled the spread of non-consensual content. The incident has reignited concerns about the platform’s ability to enforce its policies against such material and has sparked a broader conversation about the proliferation of deepfake technology.

Althoff responds to AI-generated deepfake

Bobbi Althoff, host of the popular podcast “The Really Good Podcast,” became the latest victim of deepfake technology when a sexually explicit video purporting to show her circulated widely on X. Althoff responded on Instagram, stating that the video was AI-generated and firmly denying that it was her. Despite her swift denial, the video garnered over 6.5 million views before X took any significant action.

X’s ineffectiveness in curbing deepfake content

The Althoff incident underscores the platform’s struggle to combat the spread of non-consensual deepfake videos, even though its policies clearly prohibit such content. The video remained accessible for nearly a day, and new posts of it continued to surface. This slow response has drawn criticism from users and industry experts alike, highlighting the urgent need for better detection and moderation mechanisms.

The rise of deepfake technology has prompted industry leaders to call for heightened regulatory measures to address the proliferation of non-consensual content. Over 800 experts, including presidential candidates, tech CEOs, and prominent figures like Yoshua Bengio, have signed an open letter urging governments to intervene urgently. Concerns about the potential misuse of deepfakes, particularly in political contexts, have further fueled demands for stricter regulations.

In response to growing concerns, OpenAI banned the use of its own AI tools for political campaigning and lobbying, a proactive step to mitigate the risks of AI manipulation.

Platform’s response and policy analysis

While X’s policies prohibit the sharing of explicit images or videos without consent, they do not explicitly address deepfake pornography. The platform’s slow and often ineffective response to such content has raised questions about its commitment to enforcing its guidelines. Despite the evident violation of its policies, X’s actions have been perceived as inadequate, fueling frustration among users and advocacy groups.

The difficulty of distinguishing genuine from AI-generated content makes it harder to detect and remove deepfake videos promptly. As social media platforms grapple with this issue, there is a growing consensus on the need for stronger cybersecurity measures and AI detection technologies to protect users from malicious manipulation.

The deepfake scandal involving Bobbi Althoff has shed light on the pervasive threat posed by AI-generated content on social media platforms. Despite efforts to combat the spread of non-consensual material, platforms like X face challenges in effectively enforcing their policies.

As concerns about deepfake technology persist, calls for regulatory intervention and improved detection mechanisms have intensified. The incident serves as a stark reminder of the urgent need to address the risks associated with AI manipulation and protect users from exploitation in the digital age.