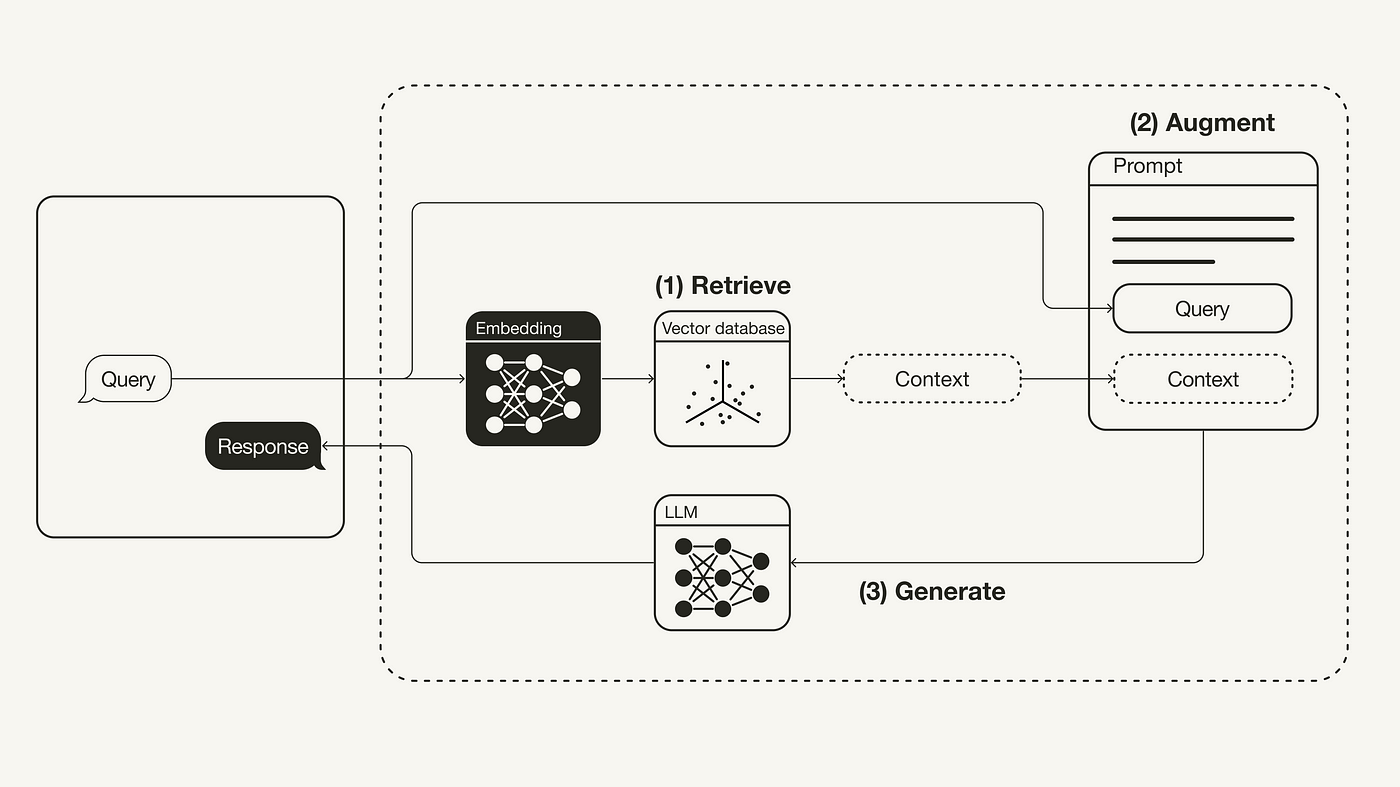

The accuracy and reliability of generative AI models can be improved with facts retrieved from external sources. The technique for fetching and using those facts is called retrieval-augmented generation (RAG).

Put simply, a good large language model (LLM) can answer a wide range of human queries. For those answers to be credible, however, they need to cite sources. That means the model has to do some research, and for that it needs an assistant. This assistive process is retrieval-augmented generation, or RAG for short.

Understanding retrieval-augmented generation, or RAG

RAG fills gaps in how LLMs work. An LLM's size, often used as a rough proxy for its power, is usually described by the number of parameters it has. Parameters are the learned values that capture general patterns in how humans use words to form sentences. Even so, LLMs can be inconsistent in the answers they provide.

Sometimes they give exactly the information the user needs; other times they churn out random facts and figures drawn from their training data. When an LLM gives a vague answer, as if it doesn't know what it is saying, that is because it really doesn't. As the description of parameters above suggests, LLMs can relate words statistically, but they don't understand what those words mean.

Integrating RAG into LLM-based chat systems has two main benefits: it gives the model access to current, reliable facts, and it lets users verify the model's claims, because they can see the sources the model drew on.

Luis Lastras, Director of Language Technologies at IBM Research, said:

“You want to cross-reference a model’s answers with the original content so you can see what it is basing its answer on.”

Source: IBM.

RAG has other benefits too. Because the model can ground its answers in external sources instead of relying solely on the data it was trained on, RAG reduces the chances of hallucinations and data leakage. It also lowers the financial and computational costs of running chatbots, since the model needs to be retrained on new data less often.
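To make the retrieve-then-generate loop concrete, here is a minimal sketch in Python. It assumes a tiny in-memory document store with made-up documents, and it uses bag-of-words cosine similarity as a simple stand-in for the embedding-based search that real RAG systems typically use. The names `DOCS`, `retrieve`, and `build_prompt` are hypothetical, and the final step of sending the prompt to an LLM is left out. What it shows is that new facts reach the model by adding a document to the store rather than by retraining, and that every retrieved passage carries a source ID a user can check.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical in-memory knowledge base: source ID -> text.
# Updating what the model "knows" means editing this dict, not retraining.
DOCS: Dict[str, str] = {
    "policy-2024": "Employees receive 25 days of paid vacation per year.",
    "policy-remote": "Remote work is allowed up to three days per week.",
    "policy-expenses": "Travel expenses must be submitted within 30 days.",
}


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: Dict[str, str], k: int = 2) -> List[Tuple[str, float]]:
    """Return (source ID, score) for the k documents most similar to the query."""
    q = tokenize(query)
    scored = [(doc_id, cosine(q, tokenize(text))) for doc_id, text in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(query: str, docs: Dict[str, str], k: int = 2) -> str:
    """Augment the question with retrieved passages, each labeled with its source ID."""
    hits = retrieve(query, docs, k)
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id, _ in hits)
    return (
        "Answer using only the sources below and cite their IDs.\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    # The augmented prompt is what would be sent to the LLM (not shown here).
    print(build_prompt("How many vacation days do employees get?", DOCS))
```

Replacing the toy `tokenize`/`cosine` pair with an embedding model and a vector index would change retrieval quality, not the shape of the loop: retrieve, augment the prompt, then generate. Because each passage keeps its ID, the model's answer can cite it, which is what lets users cross-reference the answer with the original content.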

Benefits of RAG

Traditionally, conversational systems used a hand-crafted dialogue approach. They first identified the user's intent, then fetched information based on that intent and answered from a generic script written in advance by programmers. This approach could handle simple, straightforward questions, but it had limitations.
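For contrast, the scripted approach can be sketched as a lookup from a detected intent to a canned reply. This is a hypothetical, minimal illustration: intent detection is reduced to keyword matching, and the intents, keywords, and replies (`INTENT_KEYWORDS`, `SCRIPTS`, `FALLBACK`) are made up. Any question the programmers did not anticipate falls through to the fallback message, which is the limitation described here.

```python
import re
from typing import Optional

# Hypothetical intent -> trigger keywords, maintained by hand.
INTENT_KEYWORDS = {
    "opening_hours": {"hours", "open", "close"},
    "reset_password": {"password", "reset", "login"},
}

# Hypothetical fixed script: one canned reply per intent.
SCRIPTS = {
    "opening_hours": "We are open 9am to 5pm, Monday to Friday.",
    "reset_password": "Click 'Forgot password' on the login page to reset it.",
}

FALLBACK = "Sorry, I didn't understand. Please rephrase your question."


def detect_intent(message: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the message, else None."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return None


def reply(message: str) -> str:
    """Answer from the fixed script; anything unscripted gets the fallback."""
    intent = detect_intent(message)
    return SCRIPTS[intent] if intent else FALLBACK


if __name__ == "__main__":
    print(reply("What time do you open?"))            # scripted answer
    print(reply("Can I get a refund for my order?"))  # no intent matches: fallback
```

Covering a new kind of question means a person adding both keywords and a reply, which is why scripting answers to every possible query was so time-consuming.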

Scripting answers to every query a customer might ask was time-consuming, and if the user missed a step, the chatbot could not handle the situation or improvise. Today's technology lets chatbots give users personalized replies without humans writing new scripts, and RAG goes a step further by grounding the model in fresh content and reducing the need for retraining. As Lastras put it:

“Think of the model as an overeager junior employee that blurts out an answer before checking the facts, experience teaches us to stop and say when we don’t know something. But LLMs need to be explicitly trained to recognize questions they can’t answer.”

Source: IBM.

Users' questions are not always straightforward. They can be complex, vague, or wordy, or they may require information the model lacks or can't easily parse. In such cases, LLMs can hallucinate. Fine-tuning can help prevent this by training the LLM to stop when it faces such a question, but the model needs to be fed thousands of examples of these questions to learn to recognize them.

RAG is currently the best-known technique for grounding LLMs in the latest, most verifiable data while also reducing the need for training. It is still evolving, however, and needs more research to iron out its imperfections.

The source of inspiration for this article can be seen here.